User guide

Here we share some tips and examples on how to use the DSL to tackle common use cases.

The provided examples use JUnit 5 and AssertJ, but you can use other testing and assertion libraries.

Explore the DSL in your preferred IDE to discover all available features, and consider reviewing existing tests for additional examples.

The DSL currently supports the most commonly used cases, keeping it simple and avoiding investing development effort in features that might not be needed. If you identify any particular scenario (or JMeter feature) that you need and that is not currently supported, or not easy to use, please let us know by creating an issue and we will try to implement it as soon as possible. Porting JMeter features is usually quite fast.

TIP

If you like this project, please give it a star ⭐ on GitHub! This helps the project be more visible, gain relevance, and encourages us to invest more effort in new features.

For an intro to JMeter concepts and components, you can check JMeter official documentation.

Setup

To use the DSL, just include it in your project:

Maven:

<dependency>
  <groupId>us.abstracta.jmeter</groupId>
  <artifactId>jmeter-java-dsl</artifactId>
  <version>1.29.1</version>
  <scope>test</scope>
</dependency>

Gradle:

testImplementation("us.abstracta.jmeter:jmeter-java-dsl:1.29.1") {
  exclude("org.apache.jmeter", "bom")
}

TIP

Here is a sample project in case you want to start one from scratch.

Simple HTTP test plan

To generate HTTP requests, just use the provided httpSampler.

The following example uses 2 threads (concurrent users) that send 10 HTTP GET requests each to http://my.service.

Additionally, it logs collected statistics (response times, status codes, etc.) to a file (for later analysis if needed) and checks that the 99th percentile of response times is less than 5 seconds.

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;
import java.time.Duration;
import org.junit.jupiter.api.Test;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void testPerformance() throws IOException {
    TestPlanStats stats = testPlan(
        threadGroup(2, 10,
            httpSampler("http://my.service")
        ),
        //this is just to log details of each request stats
        jtlWriter("target/jtls")
    ).run();
    assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));
  }

}
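sampleTimePercentile99() returns the sample time at or below which 99% of collected samples fall. As a rough mental model only, the following plain-Java sketch computes a nearest-rank percentile over a list of sample durations. The class and method names are hypothetical, and the DSL's own percentile computation may use a different algorithm, so values can differ slightly.

```java
import java.time.Duration;
import java.util.List;

// Illustrative nearest-rank percentile; NOT the DSL's implementation of sampleTimePercentile99().
final class PercentileSketch {

  static Duration percentile(List<Duration> samples, int percentile) {
    if (samples.isEmpty() || percentile < 1 || percentile > 100) {
      throw new IllegalArgumentException("need samples and a percentile in 1..100");
    }
    long[] millis = samples.stream().mapToLong(Duration::toMillis).sorted().toArray();
    // nearest rank: smallest value with at least `percentile` % of samples at or below it
    int rank = (int) Math.ceil(percentile * millis.length / 100.0);
    return Duration.ofMillis(millis[rank - 1]);
  }
}
```

With 100 samples from 1 ms to 100 ms, this sketch returns 99 ms for the 99th percentile, which an assertion like isLessThan(Duration.ofSeconds(5)) would accept.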

TIP

When working with multiple samplers in a test plan, specify their names (eg: httpSampler("home", "http://my.service")) to easily check their respective statistics.

TIP

Set connection and response timeouts to avoid potential execution differences when running the test plan on different machines. Here are more details.

TIP

Since JMeter uses log4j2, if you want to control the logging level or output, you can use something similar to this log4j2.xml.

TIP

Keep in mind that you can use Java programming to modularize and create abstractions which allow you to build complex test plans that are still easy to read, use and maintain. Here is an example of some complex abstraction built using Java features and the DSL.

Check HTTP performance testing for additional details while testing HTTP services.

DSL recorder

When creating test plans, you can rely just on the IDE or you can use the provided recorder.

Here is a small demo using it:

TIP

You can use jbang to easily execute the recorder with the latest version available. E.g.:

jbang us.abstracta.jmeter:jmeter-java-dsl-cli:1.29.1 recorder http://retailstore.test

TIP

Use java -jar jmdsl.jar help recorder to see the list of options to customize your recording.

TIP

In general, use --url-includes to ignore URLs that are not relevant to the performance test.

WARNING

Unlike the rest of JMeter DSL, which is compiled with Java 8, jmdsl.jar and us.abstracta.jmeter:jmeter-java-dsl-cli are compiled with Java 11 due to requirements of some dependencies (mainly the latest Selenium drivers).

So, to run the above commands, you will need Java 11 or newer.

Correlations

To avoid fragile test plans with fixed values in request parameters, the DSL recorder, through the usage of the JMeter Correlation Recorder Plugin, allows you to define correlation rules.

Correlation rules define regular expressions, which allow the recorder to automatically add regexExtractor elements and to replace occurrences of extracted values in subsequent requests with proper variable references.

For example, for the same scenario previously shown, and using the --config option (which makes correlation rules easier to maintain) with the following file:

recorder:
  url: http://retailstore.test
  urlIncludes:
    - retailstore.test.*
  correlations:
    - variable: productId
      extractor: name="productId" value="([^"]+)"
      replacement: productId=(.*)

We get this test plan:

///usr/bin/env jbang "$0" "$@" ; exit $?
/*
These commented lines make the class executable if you have jbang installed by making the file
executable (eg: chmod +x ./PerformanceTest.java) and just executing it with ./PerformanceTest.java
*/
//DEPS org.assertj:assertj-core:3.23.1
//DEPS org.junit.jupiter:junit-jupiter-engine:5.9.1
//DEPS org.junit.platform:junit-platform-launcher:1.9.1
//DEPS us.abstracta.jmeter:jmeter-java-dsl:1.29.1

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;
import java.io.PrintWriter;
import java.nio.charset.StandardCharsets;
import org.apache.http.entity.ContentType;
import org.apache.jmeter.protocol.http.util.HTTPConstants;
import org.junit.jupiter.api.Test;
import org.junit.platform.engine.discovery.DiscoverySelectors;
import org.junit.platform.launcher.core.LauncherDiscoveryRequestBuilder;
import org.junit.platform.launcher.core.LauncherFactory;
import org.junit.platform.launcher.listeners.SummaryGeneratingListener;
import org.junit.platform.launcher.listeners.TestExecutionSummary;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void test() throws IOException {
    TestPlanStats stats = testPlan(
        threadGroup(1, 1,
            httpDefaults()
                .encoding(StandardCharsets.UTF_8),
            httpSampler("/-1", "http://retailstore.test"),
            httpSampler("/home-3", "http://retailstore.test/home")
                .children(
                    regexExtractor("productId#2", "name=\"productId\" value=\"([^\"]+)\"")
                        .defaultValue("productId#2_NOT_FOUND")
                ),
            httpSampler("/cart-16", "http://retailstore.test/cart")
                .method(HTTPConstants.POST)
                .contentType(ContentType.APPLICATION_FORM_URLENCODED)
                .rawParam("productId", "${productId#2}"),
            httpSampler("/cart-17", "http://retailstore.test/cart")
        )
    ).run();
    assertThat(stats.overall().errorsCount()).isEqualTo(0);
  }

  /*
   This method is only included to make the test class self-executable. You can remove it when
   executing tests with maven, gradle, or some other tool.
   */
  public static void main(String[] args) {
    SummaryGeneratingListener summaryListener = new SummaryGeneratingListener();
    LauncherFactory.create()
        .execute(LauncherDiscoveryRequestBuilder.request()
                .selectors(DiscoverySelectors.selectClass(PerformanceTest.class))
                .build(),
            summaryListener);
    TestExecutionSummary summary = summaryListener.getSummary();
    summary.printFailuresTo(new PrintWriter(System.err));
    System.exit(summary.getTotalFailureCount() > 0 ? 1 : 0);
  }

}

In this test plan you can see an automatically added extractor and the usage of the extracted value in a subsequent request (as a variable reference).
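To see what the correlation rule from the config file does to the recorded traffic, here is a conceptual plain-Java sketch (not the recorder's actual implementation): the extractor regex captures the productId value from a response, and the replacement regex locates the recorded value in a later request body so it can be swapped for a ${productId#2} variable reference. The class and method names are hypothetical.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Conceptual sketch of a correlation rule; NOT the recorder's code.
final class CorrelationSketch {

  // "extractor" from the config file: captures the productId value in the HTML response
  static final Pattern EXTRACTOR = Pattern.compile("name=\"productId\" value=\"([^\"]+)\"");
  // "replacement" from the config file: group 1 is the recorded value to parameterize
  static final Pattern REPLACEMENT = Pattern.compile("productId=(.*)");

  static Optional<String> extract(String responseBody) {
    Matcher m = EXTRACTOR.matcher(responseBody);
    return m.find() ? Optional.of(m.group(1)) : Optional.empty();
  }

  // Replaces captured group 1 in the request body with a JMeter variable reference
  static String parameterize(String requestBody, String variableName) {
    Matcher m = REPLACEMENT.matcher(requestBody);
    if (!m.find()) {
      return requestBody;
    }
    return requestBody.substring(0, m.start(1)) + "${" + variableName + "}"
        + requestBody.substring(m.end(1));
  }
}
```

Note that the greedy (.*) in the replacement rule captures everything up to the end of the line, which works for a body with a single form parameter like the one above.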

TIP

To identify potential correlations, you can look for request parameters or URLs with fixed values, and then check the automatically created recording .jtl file (by default in the target/recording folder) to identify the proper regular expression for extraction.

We have ideas to ease this in the future, but if you have ideas, or just want to give more priority to improving this, please create an issue in the repository to let us know.

TIP

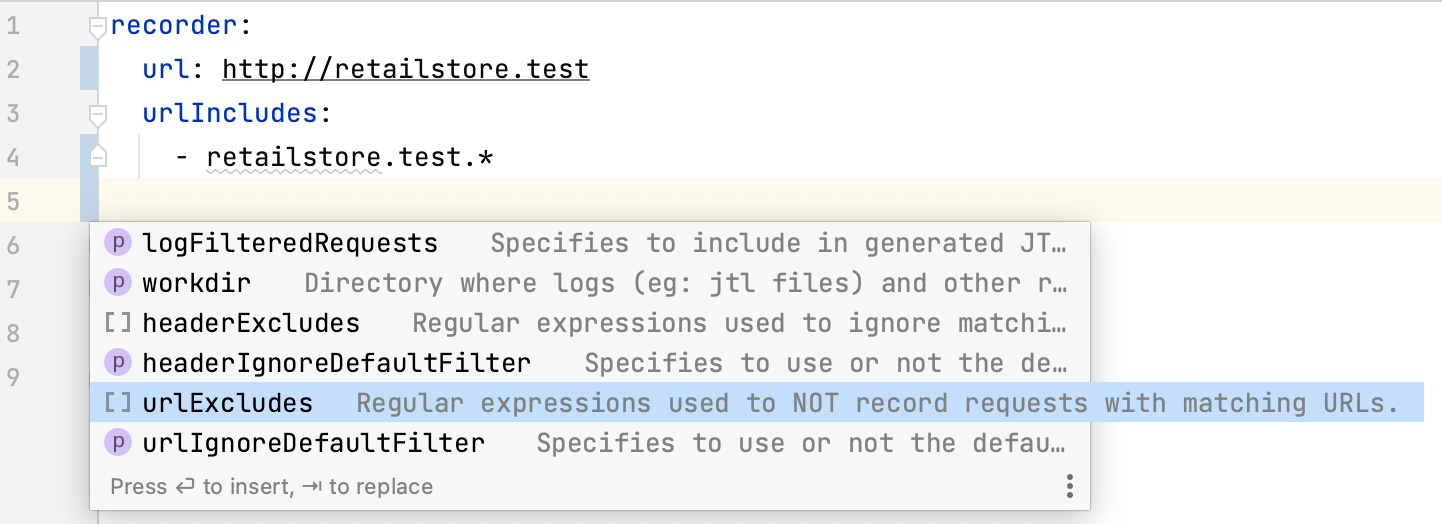

When using --config, take advantage of your IDEs auto-completion and inline documentation capabilities by using .jmdsl.yml suffix in config file names.

Here is a screenshot of autocompletion in action:

DSL code generation from JMX file

To ease migrating existing JMeter test plans and learning about DSL features, the DSL provides the jmx2dsl CLI command (download the latest CLI version from the releases page, or use jbang), which you can use to generate DSL code from existing JMX files.

As an example, running either of these commands:

java -jar jmdsl.jar jmx2dsl test-plan.jmx

jbang us.abstracta.jmeter:jmeter-java-dsl-cli:1.29.1 jmx2dsl test-plan.jmx

could generate something like the following output:

///usr/bin/env jbang "$0" "$@" ; exit $?
/*
These commented lines make the class executable if you have jbang installed by making the file
executable (eg: chmod +x ./PerformanceTest.java) and just executing it with ./PerformanceTest.java
*/
//DEPS org.assertj:assertj-core:3.23.1
//DEPS org.junit.jupiter:junit-jupiter-engine:5.9.1
//DEPS org.junit.platform:junit-platform-launcher:1.9.1
//DEPS us.abstracta.jmeter:jmeter-java-dsl:1.29.1

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;
import java.io.PrintWriter;
import org.junit.jupiter.api.Test;
import org.junit.platform.engine.discovery.DiscoverySelectors;
import org.junit.platform.launcher.core.LauncherDiscoveryRequestBuilder;
import org.junit.platform.launcher.core.LauncherFactory;
import org.junit.platform.launcher.listeners.SummaryGeneratingListener;
import org.junit.platform.launcher.listeners.TestExecutionSummary;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void test() throws IOException {
    TestPlanStats stats = testPlan(
        threadGroup(2, 10,
            httpSampler("http://my.service")
        ),
        jtlWriter("target/jtls")
    ).run();
    assertThat(stats.overall().errorsCount()).isEqualTo(0);
  }

  /*
   This method is only included to make the test class self-executable. You can remove it when
   executing tests with maven, gradle, or some other tool.
   */
  public static void main(String[] args) {
    SummaryGeneratingListener summaryListener = new SummaryGeneratingListener();
    LauncherFactory.create()
        .execute(LauncherDiscoveryRequestBuilder.request()
                .selectors(DiscoverySelectors.selectClass(PerformanceTest.class))
                .build(),
            summaryListener);
    TestExecutionSummary summary = summaryListener.getSummary();
    summary.printFailuresTo(new PrintWriter(System.err));
    System.exit(summary.getTotalFailureCount() > 0 ? 1 : 0);
  }

}

WARNING

Unlike the rest of JMeter DSL, which is compiled with Java 8, jmdsl.jar and us.abstracta.jmeter:jmeter-java-dsl-cli are compiled with Java 11 due to requirements of some dependencies (mainly the latest Selenium drivers).

So, to run the above commands, you will need Java 11 or newer.

TIP

Review and try the generated code before executing it as is. For example, set threads and iterations in thread groups to 1 for a first trial run.

TIP

Always review generated DSL code. You should add proper assertions to it, and you might want to clean it up, add the dependencies listed in its initial comments to your Maven or Gradle project, modularize it better, check that the conversion is accurate according to the DSL, or even propose improvements for it in the GitHub repository.

TIP

Conversions can always be improved, and since there are many combinations, particular use cases, different semantics, etc., getting a perfect conversion for every scenario can be tricky.

If you find any potential improvement to code generation, please help us by creating an issue or discussion in GitHub repository.

Run test at scale

Running a load test from one machine is not always enough, since you are limited to the machine's hardware capabilities. Sometimes it is necessary to run the test using a cluster of machines to be able to generate enough load for the system under test.

BlazeMeter

By including the following module as a dependency:

Maven:

<dependency>
  <groupId>us.abstracta.jmeter</groupId>
  <artifactId>jmeter-java-dsl-blazemeter</artifactId>
  <version>1.29.1</version>
  <scope>test</scope>
</dependency>

Gradle:

testImplementation 'us.abstracta.jmeter:jmeter-java-dsl-blazemeter:1.29.1'

You can easily run a JMeter test plan at scale in BlazeMeter like this:

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.time.Duration;
import org.junit.jupiter.api.Test;
import us.abstracta.jmeter.javadsl.blazemeter.BlazeMeterEngine;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void testPerformance() throws Exception {
    TestPlanStats stats = testPlan(
        // number of threads and iterations are in the end overwritten by BlazeMeter engine settings
        threadGroup(2, 10,
            httpSampler("http://my.service")
        )
    ).runIn(new BlazeMeterEngine(System.getenv("BZ_TOKEN"))
        .testName("DSL test")
        .totalUsers(500)
        .holdFor(Duration.ofMinutes(10))
        .threadsPerEngine(100)
        .testTimeout(Duration.ofMinutes(20)));
    assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));
  }

}

This test is using BZ_TOKEN, a custom environment variable with <KEY_ID>:<KEY_SECRET> format, to get the BlazeMeter API authentication credentials.
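Since a missing or malformed BZ_TOKEN would otherwise only surface once the engine tries to authenticate, you may want to validate it up front. The following is a hypothetical helper (not part of the DSL) that checks a credentials value has the expected number of non-empty ':'-separated parts; the token itself should still be passed unchanged to BlazeMeterEngine.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical fail-fast check for credentials environment variables (not part of the DSL).
final class CredentialsCheck {

  static List<String> split(String name, String value, int expectedParts) {
    if (value == null || value.isEmpty()) {
      throw new IllegalStateException("Environment variable " + name + " is not set");
    }
    // the limit keeps any extra ':' characters inside the last part
    String[] parts = value.split(":", expectedParts);
    if (parts.length != expectedParts || Arrays.stream(parts).anyMatch(String::isEmpty)) {
      throw new IllegalStateException(
          name + " must have " + expectedParts + " non-empty ':'-separated parts");
    }
    return Arrays.asList(parts);
  }
}
```

For example, you could call CredentialsCheck.split("BZ_TOKEN", System.getenv("BZ_TOKEN"), 2) at the start of the test, before building the engine.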

Note that it is as simple as generating a BlazeMeter authentication token and adding .runIn(new BlazeMeterEngine(...)) to any existing jmeter-java-dsl test to get it running at scale in BlazeMeter.

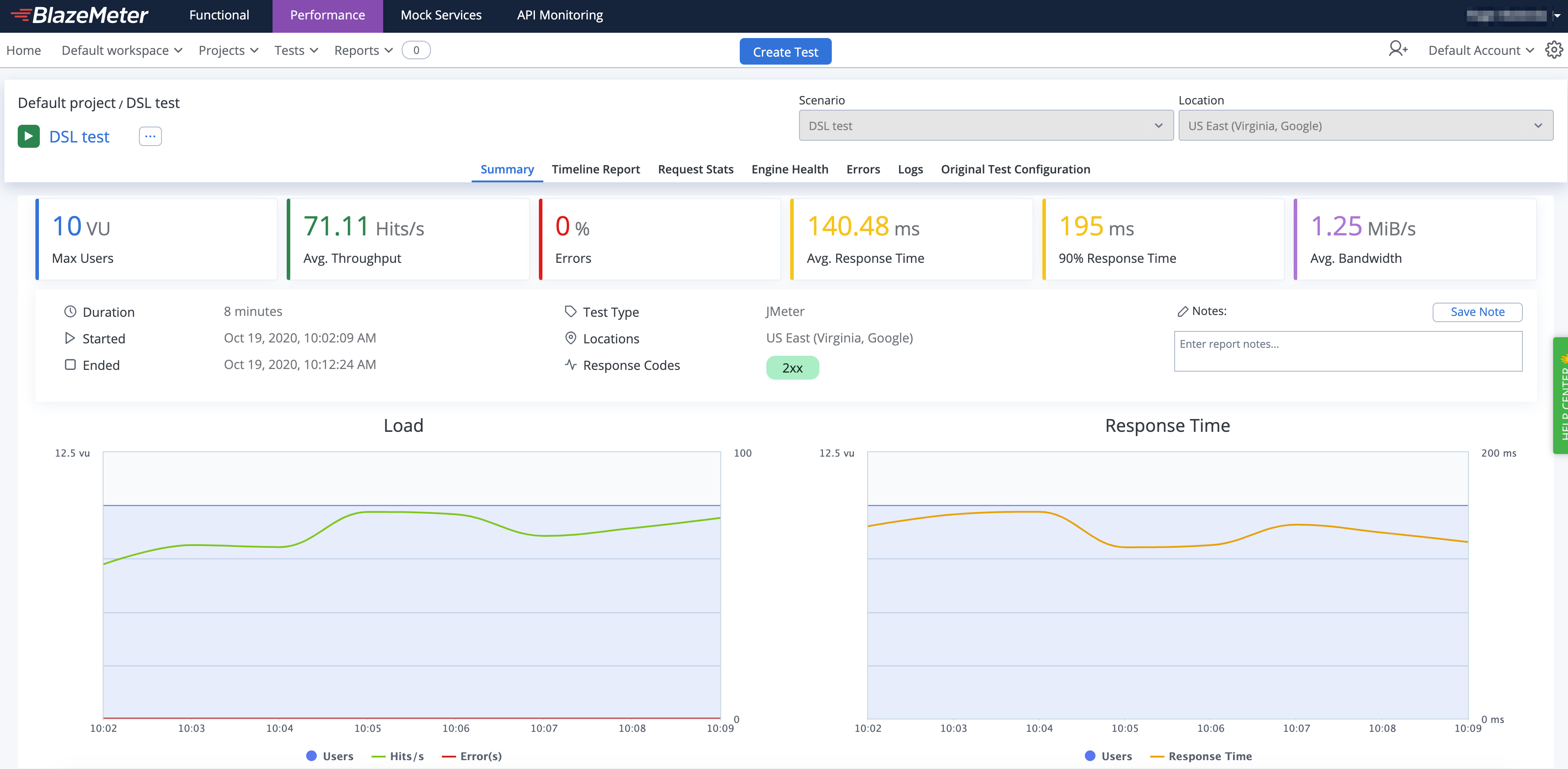

BlazeMeter will not only allow you to run the test at scale but also provides additional features like nice real-time reporting, historic data tracking, etc. Here is an example of how a test would look in BlazeMeter:

Check BlazeMeterEngine for details on usage and available settings when running tests in BlazeMeter.

WARNING

By default, the engine is configured to time out if test execution takes more than 1 hour. This timeout exists to avoid any potential problem with BlazeMeter execution not detected by the client, and to avoid keeping the test running indefinitely until it is interrupted by a user, which may incur unnecessary expenses in BlazeMeter and is especially annoying when running tests in an automated fashion, for example in CI/CD. It is strongly advised to set this timeout properly in each run, according to the expected test execution time plus some additional margin (to account for additional delays in BlazeMeter test setup and teardown), to avoid unexpected test plan execution failures (due to timeout) or unnecessary waits when there is some unexpected issue with BlazeMeter execution.
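One way to follow this advice is to derive the timeout from the planned load profile instead of hard-coding it. The following sketch is a hypothetical helper; the margin rule (half the expected duration, but at least 5 minutes) is an arbitrary assumption that you should tune to the setup and teardown times you actually observe.

```java
import java.time.Duration;

// Hypothetical helper: expected run time plus a margin for cloud setup/teardown.
// The margin rule (half the expected time, at least 5 minutes) is an arbitrary assumption.
final class TimeoutSketch {

  static final Duration MIN_MARGIN = Duration.ofMinutes(5);

  static Duration testTimeout(Duration rampUp, Duration hold) {
    Duration expected = rampUp.plus(hold);
    Duration half = expected.dividedBy(2);
    Duration margin = half.compareTo(MIN_MARGIN) > 0 ? half : MIN_MARGIN;
    return expected.plus(margin);
  }
}
```

For a 1 minute ramp-up and a 10 minute hold, this yields 16.5 minutes, a value you could pass to .testTimeout(...).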

WARNING

BlazeMeterEngine always returns 0 as sentBytes statistics, since there is no efficient way to get it from BlazeMeter.

TIP

BlazeMeterEngine will automatically upload to BlazeMeter any files used in csvDataSet, and in httpSampler with bodyFile or bodyFilePart methods.

For example, this test plan works out of the box (no need to upload referenced files or adapt the test plan):

testPlan(
    threadGroup(100, Duration.ofMinutes(5),
        csvDataSet(new TestResource("users.csv")),
        httpSampler(SAMPLE_LABEL, "https://myservice/users/${USER}")
    )
).runIn(new BlazeMeterEngine(System.getenv("BZ_TOKEN"))
    .testTimeout(Duration.ofMinutes(10)));

If you need additional files to be uploaded to BlazeMeter, you can easily specify them with the BlazeMeterEngine.assets() method.

TIP

By default, BlazeMeterEngine will run tests from the default location (most of the time us-east4-a). But in some scenarios you might want to change the location, or even run the test from multiple locations.

Here is an example of how you can easily set this up:

testPlan(
    threadGroup(300, Duration.ofMinutes(5), // 300 total users for 5 minutes
        httpSampler(SAMPLE_LABEL, "https://myservice")
    )
).runIn(new BlazeMeterEngine(System.getenv("BZ_TOKEN"))
    .location(BlazeMeterLocation.GCP_SAO_PAULO, 30) // 30% = 90 users will run in Google Cloud Platform at Sao Paulo
    .location("MyPrivateLocation", 70) // 70% = 210 users will run in MyPrivateLocation named private location
    .testTimeout(Duration.ofMinutes(10)));
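The percentages in the location() calls are shares of the total users configured for the test. The following plain-Java sketch only reproduces the arithmetic from the comments above (300 users split 30/70 into 90 and 210). The rounding rule, and the requirement that percentages add up to 100, are assumptions of this sketch rather than documented BlazeMeter behavior.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative arithmetic only: BlazeMeter applies the split itself.
// Assumptions: percentages must add up to 100; floor each share, give the remainder to the last location.
final class LocationSplit {

  static Map<String, Integer> split(int totalUsers, Map<String, Integer> percentages) {
    int sum = percentages.values().stream().mapToInt(Integer::intValue).sum();
    if (sum != 100) {
      throw new IllegalArgumentException("this sketch expects percentages adding up to 100, got " + sum);
    }
    Map<String, Integer> users = new LinkedHashMap<>();
    int assigned = 0;
    String last = null;
    for (Map.Entry<String, Integer> e : percentages.entrySet()) {
      int n = totalUsers * e.getValue() / 100; // integer division floors the share
      users.put(e.getKey(), n);
      assigned += n;
      last = e.getKey();
    }
    users.merge(last, totalUsers - assigned, Integer::sum); // rounding remainder, if any
    return users;
  }
}
```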

TIP

If you want to get debug logs for HTTP calls to the BlazeMeter API, you can add the following settings to an existing log4j2.xml configuration file:

<Logger name="us.abstracta.jmeter.javadsl.blazemeter.BlazeMeterClient" level="DEBUG"/>
<Logger name="okhttp3" level="DEBUG"/>

WARNING

If you use test elements (JSR223 elements, httpSamplers, ifController or whileController) with Java lambdas instead of strings, check this section of the user guide to use them while running a test plan in BlazeMeter.

OctoPerf

In the same fashion as with BlazeMeter, just by including the following module as a dependency:

Maven:

<dependency>
  <groupId>us.abstracta.jmeter</groupId>
  <artifactId>jmeter-java-dsl-octoperf</artifactId>
  <version>1.29.1</version>
  <scope>test</scope>
</dependency>

Gradle:

testImplementation 'us.abstracta.jmeter:jmeter-java-dsl-octoperf:1.29.1'

You can easily run a JMeter test plan at scale in OctoPerf like this:

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.time.Duration;
import org.junit.jupiter.api.Test;
import us.abstracta.jmeter.javadsl.octoperf.OctoPerfEngine;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void testPerformance() throws Exception {
    TestPlanStats stats = testPlan(
        // number of threads and iterations are in the end overwritten by OctoPerf engine settings
        threadGroup(2, 10,
            httpSampler("http://my.service")
        )
    ).runIn(new OctoPerfEngine(System.getenv("OCTOPERF_API_KEY"))
        .projectName("DSL test")
        .totalUsers(500)
        .rampUpFor(Duration.ofMinutes(1))
        .holdFor(Duration.ofMinutes(10))
        .testTimeout(Duration.ofMinutes(20)));
    assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));
  }

}

This test is using OCTOPERF_API_KEY, a custom environment variable containing an OctoPerf API key.

Note that, as with the BlazeMeter case, it is as simple as getting the OctoPerf API key and adding .runIn(new OctoPerfEngine(...)) to any existing jmeter-java-dsl test to get it running at scale in OctoPerf.

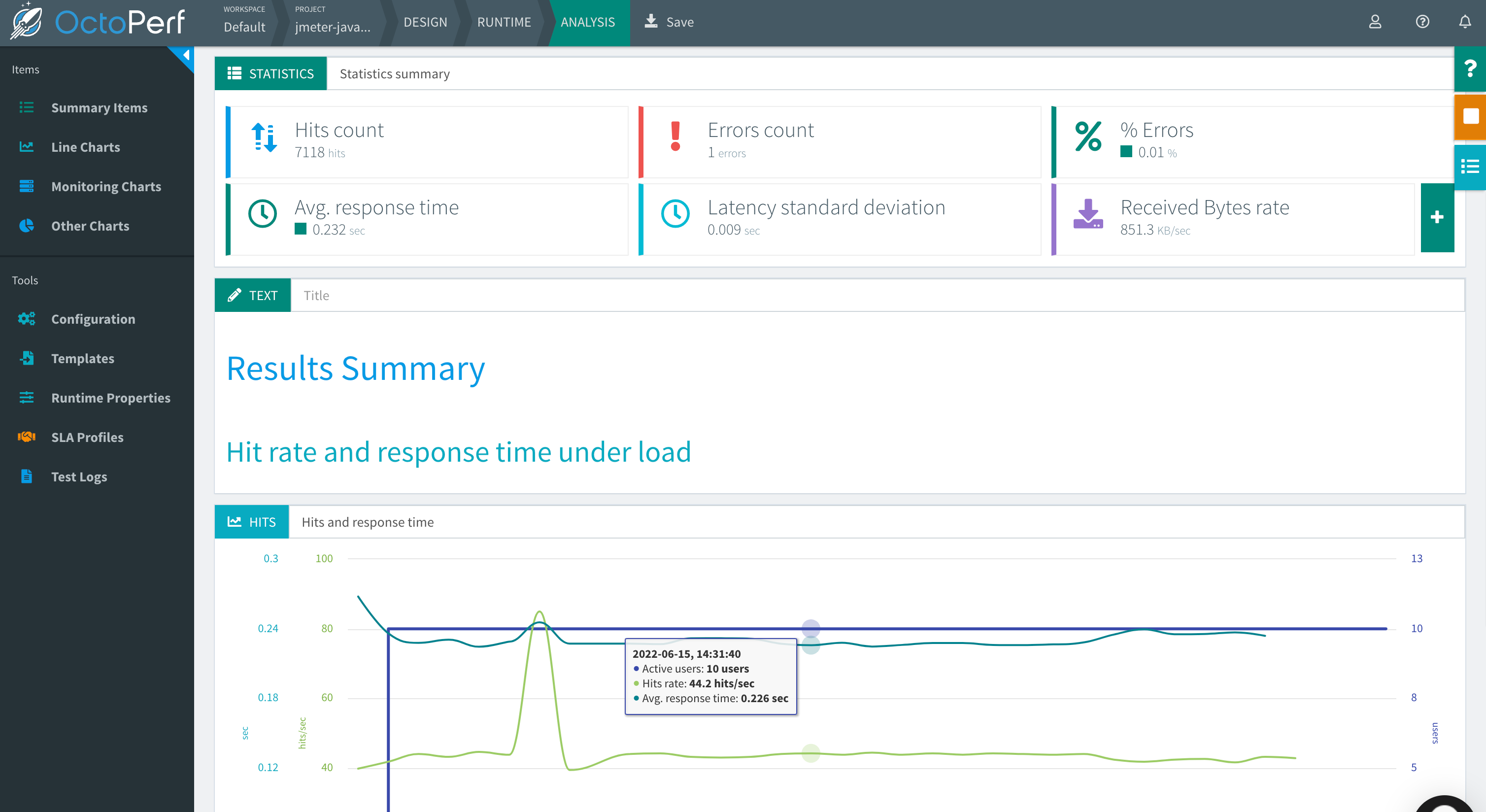

As with the BlazeMeter case, with OctoPerf you can not only run the test at scale but also get additional features like nice real-time reporting, historic data tracking, etc. Here is an example of how a test looks like in OctoPerf:

Check OctoPerfEngine for details on usage and available settings when running tests in OctoPerf.

WARNING

To avoid piling up virtual users and scenarios in the OctoPerf project, OctoPerfEngine deletes any entities it previously created (virtual users and scenarios with the jmeter-java-dsl tag) in the project.

It is very important that you use different project names for different projects to avoid interference (for example, when two jmeter-java-dsl projects run in parallel).

If you want to disable this automatic cleanup, you can use the existing OctoPerfEngine method .projectCleanUp(false).

TIP

If you want to get debug logs for HTTP calls to the OctoPerf API, you can add the following settings to an existing log4j2.xml configuration file:

<Logger name="us.abstracta.jmeter.javadsl.octoperf.OctoPerfClient" level="DEBUG"/>
<Logger name="okhttp3" level="DEBUG"/>

WARNING

There is currently no built-in support for test elements with Java lambdas in OctoPerfEngine (as there is for BlazeMeterEngine). If you need it, please request it by creating a GitHub issue.

WARNING

By default, the engine is configured to time out if test execution takes more than 1 hour. This timeout exists to avoid any potential problem with OctoPerf execution not detected by the client, and to avoid keeping the test running indefinitely until it is interrupted by a user, which is especially annoying when running tests in an automated fashion, for example in CI/CD. It is strongly advised to set this timeout properly in each run, according to the expected test execution time plus some additional margin (to account for additional delays in OctoPerf test setup and teardown), to avoid unexpected test plan execution failures (due to timeout) or unnecessary waits when there is some unexpected issue with OctoPerf execution.

Azure Load Testing

Using Azure Load Testing to execute your test plans at scale is as easy as including the following module as a dependency:

Maven:

<dependency>
  <groupId>us.abstracta.jmeter</groupId>
  <artifactId>jmeter-java-dsl-azure</artifactId>
  <version>1.29.1</version>
  <scope>test</scope>
</dependency>

Gradle:

testImplementation 'us.abstracta.jmeter:jmeter-java-dsl-azure:1.29.1'

And using the provided engine like this:

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.time.Duration;
import org.junit.jupiter.api.Test;
import us.abstracta.jmeter.javadsl.azure.AzureEngine;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void testPerformance() throws Exception {
    TestPlanStats stats = testPlan(
        threadGroup(2, 10,
            httpSampler("http://my.service")
        )
    ).runIn(new AzureEngine(System.getenv("AZURE_CREDS")) // AZURE_CREDS=tenantId:clientId:clientSecret
        .testName("dsl-test")
        /*
         This specifies the number of engine instances used to execute the test plan.
         In this case, it means that it will run 2 (threads in thread group) x 2 (engines) = 4
         concurrent users/threads in total.
         Each engine executes the test plan independently.
         */
        .engines(2)
        .testTimeout(Duration.ofMinutes(20)));
    assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));
  }

}

This test is using AZURE_CREDS, a custom environment variable containing tenantId:clientId:clientSecret with proper values for each. Check the tenant properties in the Azure Portal to get the proper tenant ID for your subscription, and follow this guide to register an application with proper permissions and generate secrets for test execution.

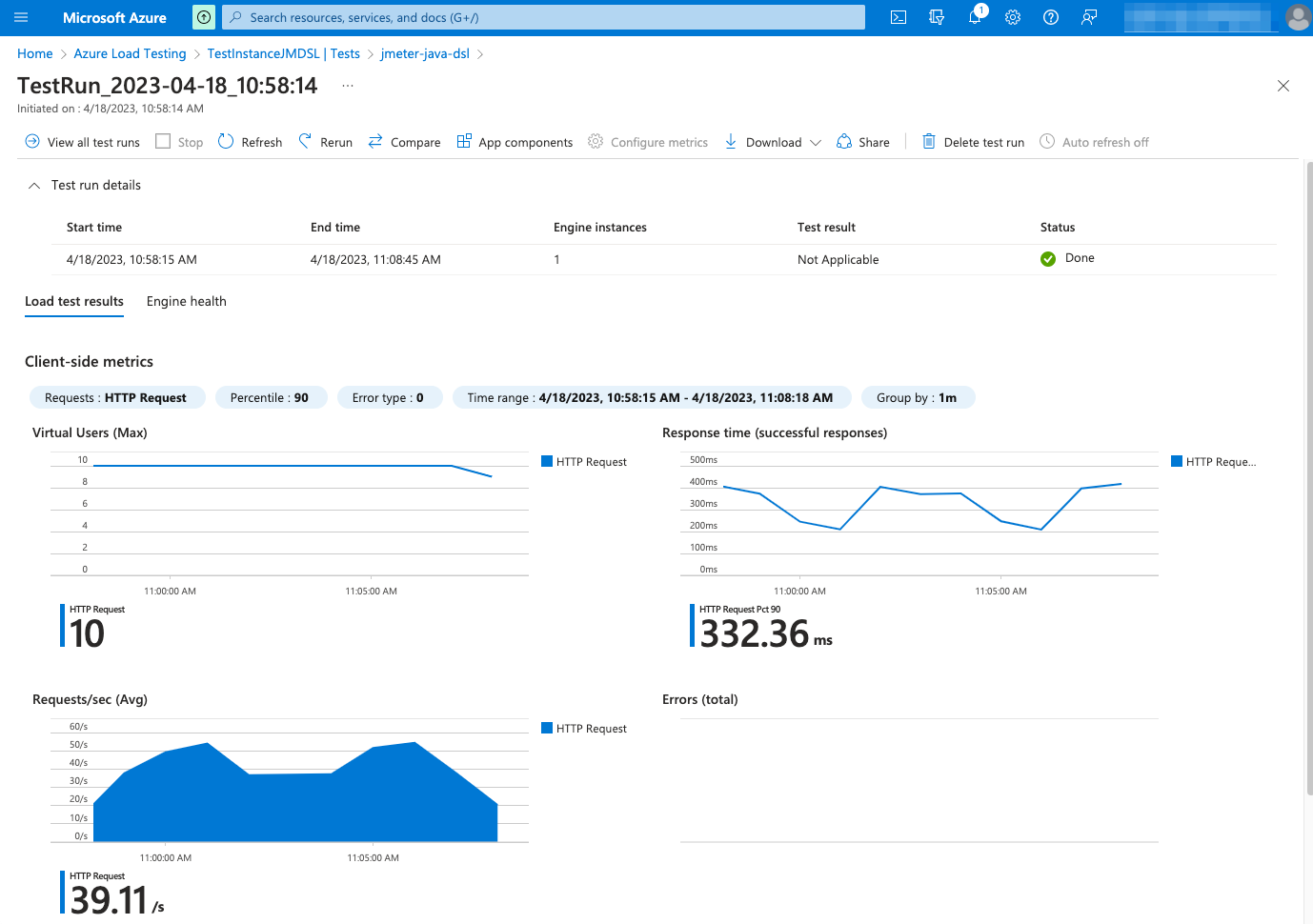

As with the BlazeMeter and OctoPerf, you can not only run the test at scale but also get additional features like nice real-time reporting, historic data tracking, etc. Here is an example of how a test looks like in Azure Load Testing:

Check AzureEngine for details on usage and available settings when running tests in Azure Load Testing.

TIP

AzureEngine will automatically upload to Azure Load Testing any files used in csvDataSet, and in httpSampler with bodyFile or bodyFilePart methods.

For example, this test plan works out of the box (no need to upload referenced files or adapt the test plan):

testPlan(
    threadGroup(100, Duration.ofMinutes(5),
        csvDataSet(new TestResource("users.csv")),
        httpSampler(SAMPLE_LABEL, "https://myservice/users/${USER}")
    )
).runIn(new AzureEngine(System.getenv("AZURE_CREDS"))
    .testTimeout(Duration.ofMinutes(10)));

If you need additional files to be uploaded to Azure Load Testing, you can easily specify them with the AzureEngine.assets() method.

TIP

If you use a csvDataSet with multiple Azure engines (through the engines() method) and want to split the provided CSVs between the engines, so that each engine does not generate the same requests, you can use splitCsvsBetweenEngines.
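Conceptually, splitting CSVs gives each engine a disjoint subset of the data rows. The following plain-Java sketch is only an illustration under stated assumptions: the first line is treated as a header that every part keeps, and rows are dealt out round robin. How AzureEngine actually partitions files is not described here and may differ.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: split CSV data rows between engines so no two engines share a row.
// Assumes line 0 is a header (variable names) that every part keeps; rows are dealt round robin.
final class CsvSplitSketch {

  static List<List<String>> split(List<String> csvLines, int engines) {
    if (engines < 1 || csvLines.isEmpty()) {
      throw new IllegalArgumentException("need at least one engine and a header line");
    }
    List<List<String>> parts = new ArrayList<>();
    for (int i = 0; i < engines; i++) {
      List<String> part = new ArrayList<>();
      part.add(csvLines.get(0)); // header row copied to every part
      parts.add(part);
    }
    for (int row = 1; row < csvLines.size(); row++) {
      // data row N (1-based) goes to engine (N - 1) % engines
      parts.get((row - 1) % engines).add(csvLines.get(row));
    }
    return parts;
  }
}
```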

TIP

If you want to correlate test runs with other entities (like a CI/CD job id, product version release, git commit, etc.), you can add such information to the test run name by using the testRunName() method.

TIP

To get a full view in the Azure Load Testing test run report, including not only the metrics collected by the performance test but also metrics from the application components under test, you can register all the application components using the monitoredResources() method.

monitoredResources() requires a list of resource IDs, which you can get by navigating in the Azure portal to the correct resource and then copying part of the URL from the browser. For example, a resource ID for a container app looks like /subscriptions/my-subscription-id/resourceGroups/my-resource-group/providers/Microsoft.App/containerapps/my-papp.
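If you copy the URL of a resource's page in the Azure portal, a small helper can cut out the resource ID. This is a hypothetical sketch that assumes the portal URL embeds the ID starting at "/subscriptions/", followed by extra blade segments such as "/overview". It only handles top-level resources (subscription, resource group, provider namespace, resource type and name), so nested resources would need more segments.

```java
import java.util.Arrays;

// Hypothetical helper: derive a top-level resource ID from an Azure portal URL.
// Assumes the ID starts at "/subscriptions/" and may be followed by blade segments (e.g. "/overview").
final class ResourceIdSketch {

  static String fromPortalUrl(String url) {
    int start = url.indexOf("/subscriptions/");
    if (start < 0) {
      throw new IllegalArgumentException("no /subscriptions/ segment in " + url);
    }
    String[] segments = url.substring(start + 1).split("/");
    // expected: subscriptions/{id}/resourceGroups/{rg}/providers/{namespace}/{type}/{name}
    if (segments.length < 8
        || !segments[2].equalsIgnoreCase("resourceGroups")
        || !segments[4].equalsIgnoreCase("providers")) {
      throw new IllegalArgumentException("unexpected resource path in " + url);
    }
    return "/" + String.join("/", Arrays.copyOfRange(segments, 0, 8));
  }
}
```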

TIP

As with BlazeMeter and OctoPerf, if you want to get debug logs for HTTP calls to the Azure API, you can add the following settings to an existing log4j2.xml configuration file:

<Logger name="us.abstracta.jmeter.javadsl.azure.AzureClient" level="DEBUG"/>
<Logger name="okhttp3" level="DEBUG"/>

WARNING

There is currently no built-in support for test elements with Java lambdas in AzureEngine (as there is for BlazeMeterEngine). If you need it, please request it by creating a GitHub issue.

WARNING

By default, the engine is configured to time out if test execution takes more than 1 hour. This timeout exists to avoid any potential problem with Azure Load Testing execution not detected by the client, and to avoid keeping the test running indefinitely until it is interrupted by a user, which may incur unnecessary expenses in Azure and is especially annoying when running tests in an automated fashion, for example in CI/CD. It is strongly advised to set this timeout properly in each run, according to the expected test execution time plus some additional margin (to account for additional delays in Azure Load Testing test setup and teardown), to avoid unexpected test plan execution failures (due to timeout) or unnecessary waits when there is some unexpected issue with Azure Load Testing execution.

JMeter remote testing

JMeter already provides means to run a test on several machines controlled by one master/client machine. This is referred to as Remote Testing.

JMeter remote testing requires setting up nodes in server/slave mode (using bin/jmeter-server JMeter script) with a configured keystore (usually rmi_keystore.jks, generated with bin/ JMeter script) which will execute a test plan triggered in a client/master node.

You can trigger such tests with the DSL using DistributedJmeterEngine as in the following example:

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.time.Duration;
import org.junit.jupiter.api.Test;
import us.abstracta.jmeter.javadsl.core.engines.DistributedJmeterEngine;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void testPerformance() throws Exception {
    TestPlanStats stats = testPlan(
        threadGroup(200, Duration.ofMinutes(10),
            httpSampler("http://my.service")
        )
    ).runIn(new DistributedJmeterEngine("host1", "host2"));
    assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));
  }

}

This will run 200 users for 10 minutes on each server/slave (host1 and host2) and aggregate all the results in returned stats.

WARNING

Use the same JMeter version as the one used by JMeter DSL when setting up the cluster, to avoid any potential issues.

For instance, JMeter 5.6 introduced some changes that currently break some plugins used by JMeter DSL, or change default behavior for test plans.

To find out the JMeter version used by JMeter DSL, you can check the version of the JMeter jars in your project dependency tree. E.g.:

mvn dependency:tree -Dincludes=org.apache.jmeter:ApacheJMeter_core

Or check the jmeter.version property in JMeter DSL's pom.xml.

WARNING

To be able to run the test, you need the rmi_keystore.jks file in the working directory of the test. For the time being, we couldn't find a way to allow setting an arbitrary path for the file.

WARNING

In general, prefer using BlazeMeter, OctoPerf or Azure options which avoid all the setup and maintenance costs of the infrastructure required by JMeter remote testing, also benefiting from other additional useful features they provide (like reporting capabilities).

TIP

Here is an example project using docker-compose that starts a JMeter server/slave and executes a test with it. If you want a similar setup, generate your own keystore and properly tune the RMI remote server configuration in the server/slave.

Check DistributedJmeterEngine and JMeter documentation for proper setup and additional options.

Auto Stop

As previously shown, it is quite easy to check after test plan execution if the collected metrics are the expected ones and fail/pass the test accordingly.

But what if you want to stop your test plan as soon as the metrics deviate from the expected ones? This could help avoid unnecessary resource usage, especially when running tests at scale, where it also avoids incurring additional costs.

With JMeter DSL you can easily define auto-stop conditions over collected metrics, that when met will stop the test plan and throw an exception that will make your test fail.

Here is an example:

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;
import static us.abstracta.jmeter.javadsl.core.listeners.AutoStopListener.AutoStopCondition.*;

import java.io.IOException;
import java.time.Duration;
import org.junit.jupiter.api.Test;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void testPerformance() throws IOException {
    TestPlanStats stats = testPlan(
        threadGroup(2, Duration.ofMinutes(1),
            httpSampler("http://my.service")
        ),
        autoStop()
            // when any sample fails, the test plan will stop and an exception will be thrown pointing to this condition
            .when(errors().total().greaterThan(0))
    ).run();
  }

}

Check AutoStopListener for details on available options for auto-stop conditions.

autoStop is inspired by the JMeter AutoStop Plugin, but provides a lot more flexibility.

TIP

autoStop will only consider samples within its scope.

If you place it as a test plan child, then it will evaluate metrics for all samples. If you place it as a thread group child, then it will evaluate metrics for samples of such thread group. If you place it as a controller child, then only samples within such controller. And, if you place it as a sampler child, it will only evaluate samples for that particular sampler.

Additionally, you can use the samplesMatching(regex) method to only evaluate metrics for a subset of samples within a given scope (eg: all samples with a label starting with users).
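To picture how a regex narrows the evaluated samples, here is a plain-Java sketch that filters sample labels with users.* (labels starting with users). Whether samplesMatching requires the whole label to match or only part of it is an assumption here: the sketch uses a full match (Matcher.matches()), so check AutoStopListener if your regex relies on partial matching. The class name is hypothetical.

```java
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// Illustrative label filtering; assumes full-match semantics, which may differ from the DSL's.
final class SampleFilterSketch {

  static List<String> matching(List<String> labels, String regex) {
    Pattern pattern = Pattern.compile(regex);
    return labels.stream()
        .filter(label -> pattern.matcher(label).matches())
        .collect(Collectors.toList());
  }
}
```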

TIP

You can add multiple autoStop elements within a test plan. The first one containing a condition that is met will trigger the auto-stop.

To identify which autoStop element triggered, you can specify a name, like autoStop("login"), and the associated name will be included in the exception thrown by autoStop when the test plan is stopped.

Additionally, you can specify several conditions on an autoStop element. When any of these conditions is met, the test plan is stopped.

TIP

By default, autoStop will evaluate each condition for each sample and stop the test plan as soon as a condition is met.

This behavior is different from the JMeter AutoStop Plugin, which evaluates and resets aggregations (it only provides average aggregation) every second.

To change this behavior, you can use the every(Duration) method (after specifying the aggregation method, e.g. errors().perSecond().every(Duration.ofSeconds(5))) to specify that the condition should only be evaluated, and the aggregation reset, once per given period.

This is particularly helpful for some aggregations (like mean, perSecond, and percent) which may get "stuck" due to historical values collected for the metric.

As an example to illustrate this issue, consider a scenario where after 10 minutes you get 10k requests with an average sample time of 1 second, but in the last 10 seconds you get 10 requests with an average of 10 seconds. In this scenario, the overall average will barely be affected by the last seconds, but you would still want to stop the test plan, since the average of the last seconds has been well above the expected value. This is a clear scenario where you would like to use the every() method.
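The numbers in this scenario can be checked with a small plain-Java sketch (a hypothetical class, not the DSL's AutoStopListener) that keeps a running mean. One instance is never reset, mimicking a cumulative aggregation; the other is reset at a period boundary, mimicking what every() enables.

```java
// Running mean that can be reset at period boundaries. Sketch only; not AutoStopListener code.
final class MeanWindowSketch {

  private double sum;
  private long count;

  void add(double sampleSeconds) {
    sum += sampleSeconds;
    count++;
  }

  double mean() {
    return count == 0 ? 0 : sum / count;
  }

  void reset() {
    sum = 0;
    count = 0;
  }
}
```

After feeding 10,000 samples of 1 second to both instances, resetting the second one, and then feeding 10 samples of 10 seconds, the cumulative mean is only about 1.009 seconds (10,100 / 10,010), while the reset one reports 10 seconds.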

TIP

By default, autoStop will stop the test plan as soon as the condition is met, but in many cases it is better to wait for the condition to be met for some period of time, to avoid some intermittent or short-lived condition. To not stop the test plan until the condition holds for a given period of time, you can use holdsFor(Duration) at the end of your condition.
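The semantics described for holdsFor(Duration) can be sketched as a small state machine: remember when the condition first became true, restart whenever an evaluation finds it false, and trigger only once it has been true for the whole period. This is an illustrative plain-Java sketch with hypothetical names; the DSL's exact boundary handling (for example, whether exactly reaching the period triggers) may differ.

```java
import java.time.Duration;
import java.time.Instant;

// Illustrative "holds for" logic; not the DSL's implementation.
final class HoldsForSketch {

  private final Duration period;
  private Instant trueSince; // null while the condition is not met

  HoldsForSketch(Duration period) {
    this.period = period;
  }

  // Returns true when the condition has been continuously met for at least `period`
  boolean evaluate(boolean conditionMet, Instant now) {
    if (!conditionMet) {
      trueSince = null; // any miss restarts the clock
      return false;
    }
    if (trueSince == null) {
      trueSince = now;
    }
    return Duration.between(trueSince, now).compareTo(period) >= 0;
  }
}
```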

WARNING

autoStop works automatically with AzureEngine, but no support has been implemented yet for BlazeMeterEngine or OctoPerfEngine. If you need such support, please create an issue in the GitHub repository.

Advanced threads configuration

jmeter-java-dsl provides two simple ways of creating thread groups which are used in most scenarios:

- specifying threads and the number of iterations each thread should execute before ending the test plan

- specifying threads and duration for which each thread should execute before the test plan ends

This is how they look in code:

threadGroup(10, 20, ...) // 10 threads for 20 iterations each

threadGroup(10, Duration.ofSeconds(20), ...) // 10 threads for 20 seconds each

But these options are not good when working with many threads or when trying to configure some complex test scenarios (like when doing incremental or peak tests).

Thread ramps and holds

When working with many threads, it is advisable to configure a ramp-up period, to avoid starting all threads at once, which would affect performance metrics and load generation.

You can easily configure a ramp-up with the DSL like this:

threadGroup().rampTo(10, Duration.ofSeconds(5)).holdIterating(20) // ramp to 10 threads in 5 seconds (1 thread every half second) and iterate each thread 20 times

threadGroup().rampToAndHold(10, Duration.ofSeconds(5), Duration.ofSeconds(20)) // similar to the above, but after ramping up, hold execution for 20 seconds
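As a rough illustration of "1 thread every half second", the following sketch computes start offsets for a linear ramp from 0 threads. RampUpSketch is hypothetical, and JMeter's real scheduling may differ in details such as whether the first thread starts immediately.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration of a linear ramp-up from 0 threads; JMeter's actual thread
// scheduling may differ in details (for example, whether the first thread starts at 0).
final class RampUpSketch {

  // Start offset of each thread when ramping from 0 to `threads` over `rampDuration`.
  static List<Duration> startOffsets(int threads, Duration rampDuration) {
    List<Duration> offsets = new ArrayList<>();
    long rampMillis = rampDuration.toMillis();
    for (int i = 0; i < threads; i++) {
      // Multiply before dividing to avoid accumulating rounding errors.
      offsets.add(Duration.ofMillis(i * rampMillis / threads));
    }
    return offsets;
  }

  public static void main(String[] args) {
    // rampTo(10, Duration.ofSeconds(5)): one new thread every 500 ms.
    startOffsets(10, Duration.ofSeconds(5)).forEach(d -> System.out.println(d.toMillis() + " ms"));
  }
}
```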

Additionally, you can use and combine these same methods to configure more complex scenarios (incremental, peak, and any other types of tests) like the following one:

threadGroup()

.rampToAndHold(10, Duration.ofSeconds(5), Duration.ofSeconds(20))

.rampToAndHold(100, Duration.ofSeconds(10), Duration.ofSeconds(30))

.rampTo(200, Duration.ofSeconds(10))

.rampToAndHold(100, Duration.ofSeconds(10), Duration.ofSeconds(30))

.rampTo(0, Duration.ofSeconds(5))

.children(

httpSampler("http://my.service")

)

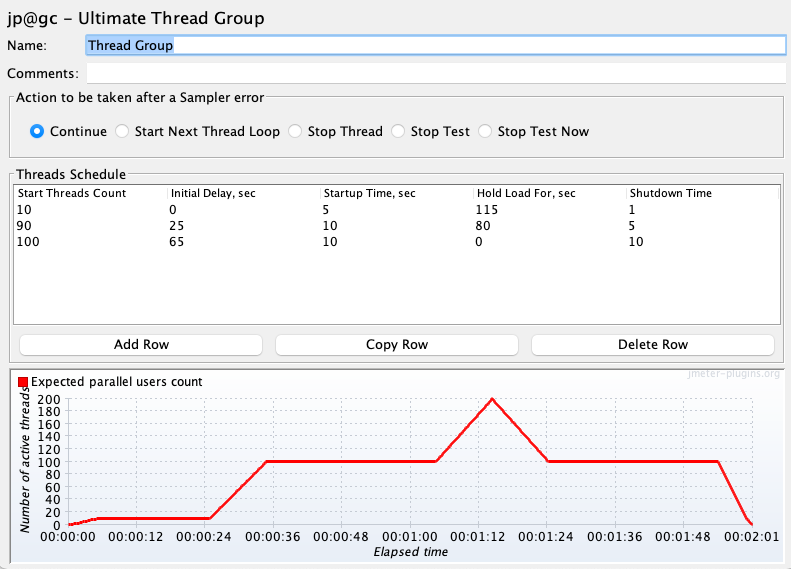

Which would translate into the following threads' timeline:

Check DslDefaultThreadGroup for more details.
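To reason about a profile like the previous one without opening a chart, you can model it as a sequence of linear stages. The following sketch is a hypothetical simplification (durations as plain seconds, holds modeled as ramps to the same thread count), not DslDefaultThreadGroup internals. For the profile above, it gives a total of 120 seconds, 55 threads at second 30, and 150 threads at second 70.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of a ramp/hold thread profile; NOT DslDefaultThreadGroup internals.
// Each stage linearly moves the thread count from the previous target to a new one.
final class ThreadProfileSketch {

  private static final class Stage {
    final int target;
    final double seconds;

    Stage(int target, double seconds) {
      this.target = target;
      this.seconds = seconds;
    }
  }

  private final List<Stage> stages = new ArrayList<>();

  ThreadProfileSketch rampTo(int threads, double seconds) {
    stages.add(new Stage(threads, seconds));
    return this;
  }

  ThreadProfileSketch rampToAndHold(int threads, double rampSeconds, double holdSeconds) {
    rampTo(threads, rampSeconds);
    return rampTo(threads, holdSeconds); // a hold is a "ramp" to the same thread count
  }

  double totalSeconds() {
    return stages.stream().mapToDouble(s -> s.seconds).sum();
  }

  // Expected thread count at second t, interpolating linearly within each stage.
  double threadsAt(double t) {
    double start = 0;
    double from = 0;
    for (Stage s : stages) {
      if (s.seconds > 0 && t <= start + s.seconds) {
        return from + (s.target - from) * (t - start) / s.seconds;
      }
      start += s.seconds;
      from = s.target;
    }
    return from; // after the last stage
  }

  public static void main(String[] args) {
    // Same profile as the threadGroup() example above, with durations in seconds.
    ThreadProfileSketch profile = new ThreadProfileSketch()
        .rampToAndHold(10, 5, 20)
        .rampToAndHold(100, 10, 30)
        .rampTo(200, 10)
        .rampToAndHold(100, 10, 30)
        .rampTo(0, 5);
    System.out.println("total seconds: " + profile.totalSeconds());
    for (int t = 0; t <= 120; t += 10) {
      System.out.println(t + " s -> " + profile.threadsAt(t) + " threads");
    }
  }
}
```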

TIP

To visualize the threads timeline of complex thread group configurations like the previous one, you can get a chart by using the provided DslThreadGroup.showTimeline() method.

TIP

If you are a JMeter GUI user, you may even be interested in using the provided TestElement.showInGui() method, which shows the JMeter test element GUI and can help you understand what the DSL will execute in JMeter. You can use this method with any test element generated by the DSL (not just thread groups).

For example, for the above test plan you would get a window like the following one:

TIP

When using multiple thread groups in a test plan, consider setting a name (eg: threadGroup("main", 1, 1, ...)) on them to properly identify associated requests in statistics & jtl results.

Throughput based thread group

Sometimes you want to focus just on the number of requests per second to generate and don't want to be concerned about how many concurrent threads/users, and pauses between requests, are needed. For these scenarios you can use rpsThreadGroup like in the following example:

rpsThreadGroup()

.maxThreads(500)

.rampTo(20, Duration.ofSeconds(10))

.rampTo(10, Duration.ofSeconds(10))

.rampToAndHold(1000, Duration.ofSeconds(5), Duration.ofSeconds(10))

.children(

httpSampler("http://my.service")

)

This will internally use the JMeter Concurrency Thread Group element in combination with the Throughput Shaping Timer.

TIP

rpsThreadGroup will dynamically create and remove threads and add delays between requests to match the traffic to the expected RPS. You can also control iterations per second (the number of times the flow in the thread group runs per second) instead of requests by using .counting(RpsThreadGroup.EventType.ITERATIONS).

WARNING

RPS values control how often threads and waits are adjusted. Avoid values that are too low (eg: under 1), which can cause long waits and fail to match the expected RPS.

JMeter Throughput Shaping Timer calculates the delay each time without taking future expected RPS into consideration. For instance, if you configure 1 thread with a ramp from 0.01 to 10 RPS over 10 seconds, when 1 request is sent the timer calculates that, to match 0.01 RPS, it has to wait requestsCount/expectedRPS = 1/0.01 = 100 seconds. This keeps the thread stuck for 100 seconds, when in fact it should have sent two additional requests after waiting 1 second (to match the ramp). Setting RPS values greater than or equal to 1 ensures at least 1 evaluation every second.
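The delay formula quoted above is simple enough to check directly. This is only the arithmetic from the text wrapped in a hypothetical helper, not the Throughput Shaping Timer source.

```java
// Hypothetical helper applying the formula from the text (delay = requestsCount / expectedRps);
// NOT the Throughput Shaping Timer source code.
final class ShapingDelaySketch {

  static double delaySeconds(long requestsCount, double expectedRps) {
    return requestsCount / expectedRps;
  }

  public static void main(String[] args) {
    // At the start of a ramp from 0.01 RPS, one sent request implies a very long wait.
    System.out.println(delaySeconds(1, 0.01) + " s at 0.01 RPS");
    // With RPS values >= 1, a single request never implies waiting more than a second.
    System.out.println(delaySeconds(1, 1.0) + " s at 1 RPS");
  }
}
```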

WARNING

When maxThreads is not specified, rpsThreadGroup will use as many threads as needed. In such scenarios, you might face an unexpected number of threads, with associated CPU and memory requirements, which may affect the performance test metrics. You should always set the maximum number of threads to avoid such scenarios.

You can use the following formula to calculate a value for maxThreads: T*R, where T is the maximum RPS that you want to achieve and R is the maximum expected response time (or iteration time if you use .counting(RpsThreadGroup.EventType.ITERATIONS)) in seconds.
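A minimal sketch of the T*R rule of thumb, rounding up to a whole number of threads. MaxThreadsEstimate is a hypothetical helper, and the 500 ms response time in main is an assumed value: with it, the 1000 RPS peak of the previous rpsThreadGroup example gives exactly the maxThreads(500) used there.

```java
import java.time.Duration;

// Hypothetical helper applying the T*R rule of thumb; not part of the DSL.
final class MaxThreadsEstimate {

  // maxRps: highest RPS in the profile (T). maxResponseTime: highest expected response
  // (or iteration) time (R). Rounds up so the estimate is never below T*R.
  static int maxThreads(double maxRps, Duration maxResponseTime) {
    double seconds = maxResponseTime.toMillis() / 1000.0;
    return (int) Math.ceil(maxRps * seconds);
  }

  public static void main(String[] args) {
    // Peak of 1000 RPS, assuming responses take at most 500 ms.
    System.out.println("maxThreads: " + maxThreads(1000, Duration.ofMillis(500)));
  }
}
```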

TIP

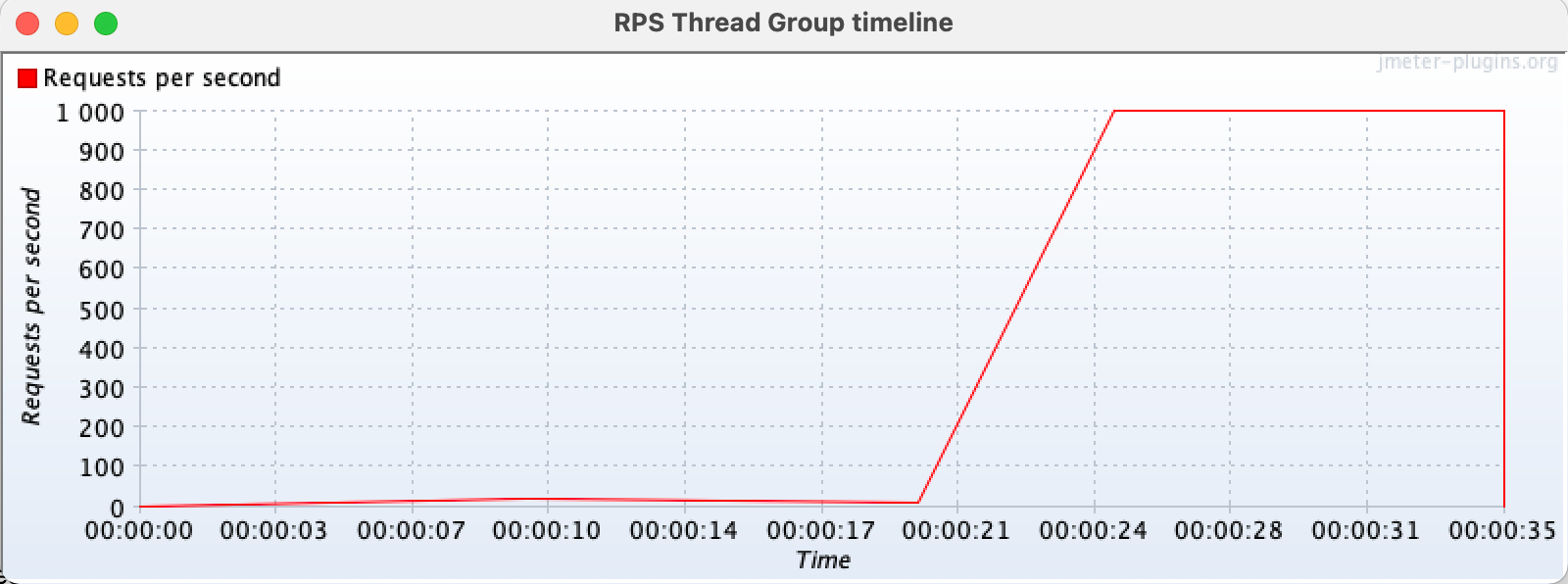

As with the default thread group, with rpsThreadGroup you can use showTimeline to get a chart of configured RPS profile for easy visualization. An example chart:

Check RpsThreadGroup for more details.

Set up & tear down

When you need to run some custom logic before or after a test plan, the simplest approach is just adding plain Java code to it, or using the features your test framework (eg: JUnit) provides for this purpose. Eg:

import static org.assertj.core.api.Assertions.assertThat;

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;

import java.time.Duration;

import org.junit.jupiter.api.Test;

import org.junit.jupiter.api.BeforeEach;

import org.junit.jupiter.api.AfterEach;

import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

@BeforeEach

public void setup() {

// my custom setup logic

}

@AfterEach

public void tearDown() {

// my custom teardown logic

}

@Test

public void testPerformance() throws IOException {

TestPlanStats stats = testPlan(

threadGroup(2, 10,

httpSampler("http://my.service")

)

).run();

assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));

}

}

But, in some cases you may need the logic to run inside the JMeter execution context (eg: set some JMeter properties), or, when the test plan runs at scale, to run in the same host where the test plan runs (for example to use some common file).

In such scenarios you can use provided setupThreadGroup & teardownThreadGroup like in the following example:

import static org.assertj.core.api.Assertions.assertThat;

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;

import java.time.Duration;

import org.apache.jmeter.protocol.http.util.HTTPConstants;

import org.junit.jupiter.api.Test;

import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

TestPlanStats stats = testPlan(

setupThreadGroup(

httpSampler("http://my.service/tokens")

.method(HTTPConstants.POST)

.children(

jsr223PostProcessor("props.put('MY_TEST_TOKEN', prev.responseDataAsString)")

)

),

threadGroup(2, 10,

httpSampler("http://my.service/products")

.header("X-MY-TOKEN", "${__P(MY_TEST_TOKEN)}")

),

teardownThreadGroup(

httpSampler("http://my.service/tokens/${__P(MY_TEST_TOKEN)}")

.method(HTTPConstants.DELETE)

)

).run();

assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));

}

}

TIP

By default, JMeter automatically executes teardown thread groups when a test plan stops due to an unscheduled event (eg: a sample error when a stop test action is configured in a thread group, or an invocation of ctx.getEngine().askThreadsToStop() in a jsr223 element). You can disable this behavior with the testPlan tearDownOnlyAfterMainThreadsDone method, which can be helpful when the teardown thread group should only run on clean test plan completion.

Check DslSetupThreadGroup and DslTeardownThreadGroup for additional tips and details on the usage of these components.

Thread groups order

By default, when you add multiple thread groups to a test plan, JMeter runs them all in parallel. This is a very helpful behavior in many cases, but in others you may want to run them sequentially (one after the other). To achieve this, you can use the sequentialThreadGroups() test plan method.

Test plan debugging

A usual requirement while building a test plan is to be able to review requests and responses and debug the test plan for potential issues in the configuration or behavior of the service under test. With jmeter-java-dsl you have several options for this purpose.

View results tree

One option is using provided resultsTreeVisualizer() like in the following example:

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;

import org.junit.jupiter.api.Test;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

testPlan(

threadGroup(1, 1,

httpSampler("http://my.service")

),

resultsTreeVisualizer()

).run();

}

}

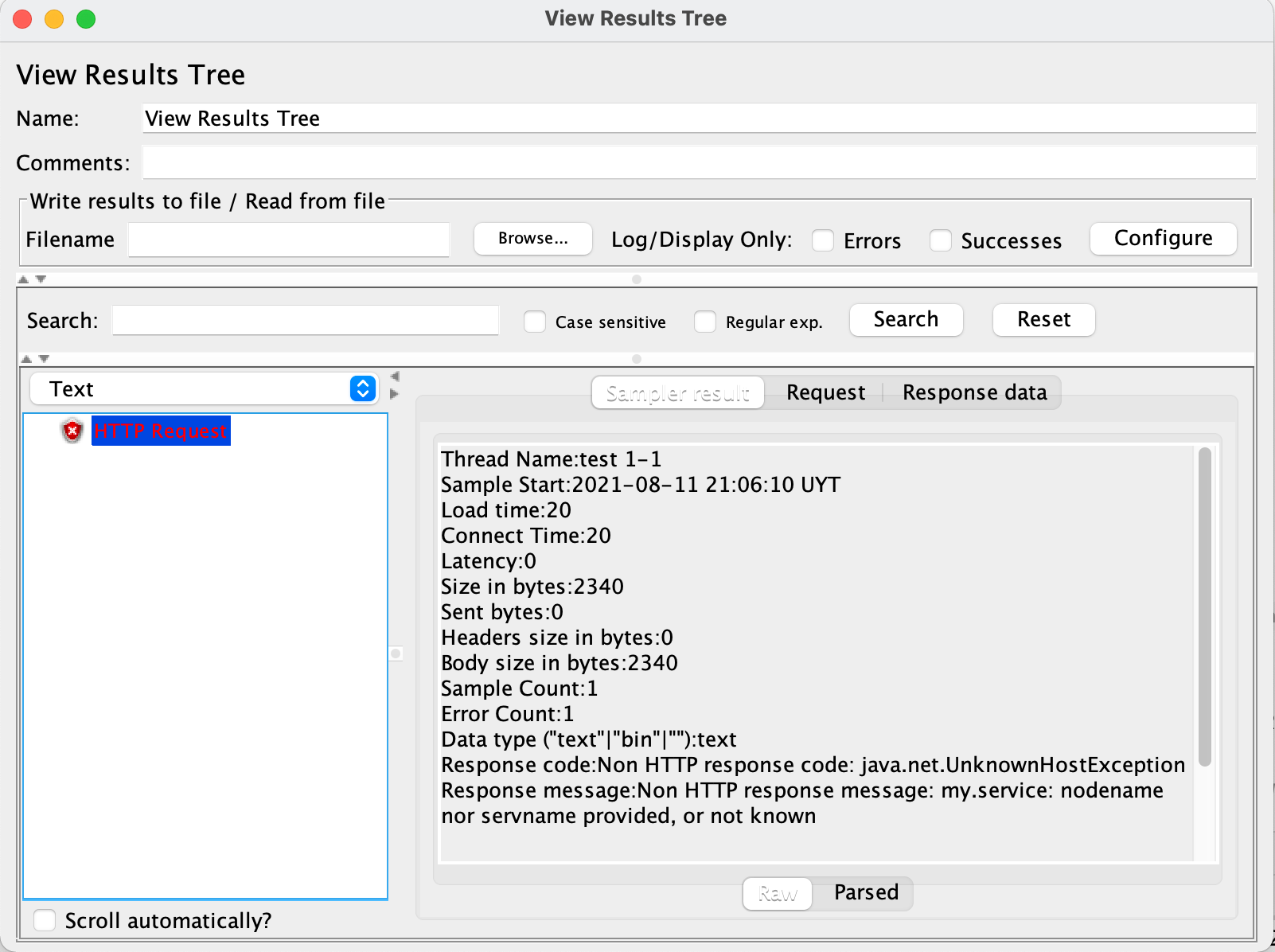

This will display the JMeter built-in View Results Tree element, which allows you to review request and response contents in addition to collected metrics (spent time, sent & received bytes, etc.) for each request sent to the server, in a window like this one:

TIP

When debugging test plans, use few iterations and threads to reduce the execution time and ease tracing, since there is less information to analyze.

TIP

When adding resultsTreeVisualizer() as a child of a thread group, it will only display sample results of that thread group. When added as a child of a sampler, it will only show sample results for that sampler. You can use this to only review certain sample results in your test plan.

TIP

Remove resultsTreeVisualizer() from test plans when they are no longer needed (when debugging is finished). Leaving them might interfere with unattended test plan execution (eg: in CI), since the test plan execution doesn't finish until all visualizer windows are closed.

WARNING

By default, View Results Tree only displays the last 500 sample results. If you need to display more elements, use the provided resultsLimit(int) method to change this value. Take into consideration that the more results are shown, the more memory is required, so use this setting with care.
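To see why a bigger limit costs more memory, here is a hypothetical "keep only the last N results" buffer: up to N results stay referenced at any time, and older ones are evicted. It illustrates the retention idea only and is not View Results Tree's actual implementation.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical "keep only the last N results" buffer; NOT View Results Tree's implementation.
// Memory grows with the limit, since up to `limit` results stay referenced at any time.
final class LastNResults<T> {
  private final int limit;
  private final Deque<T> results = new ArrayDeque<>();

  LastNResults(int limit) {
    if (limit <= 0) {
      throw new IllegalArgumentException("limit must be positive");
    }
    this.limit = limit;
  }

  void add(T result) {
    if (results.size() == limit) {
      results.removeFirst(); // evict the oldest result
    }
    results.addLast(result);
  }

  int size() {
    return results.size();
  }

  T oldest() {
    return results.peekFirst();
  }

  public static void main(String[] args) {
    LastNResults<Integer> view = new LastNResults<>(500);
    for (int i = 0; i < 600; i++) {
      view.add(i);
    }
    System.out.println(view.size() + " results kept, oldest is #" + view.oldest());
  }
}
```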

Post-processor breakpoints

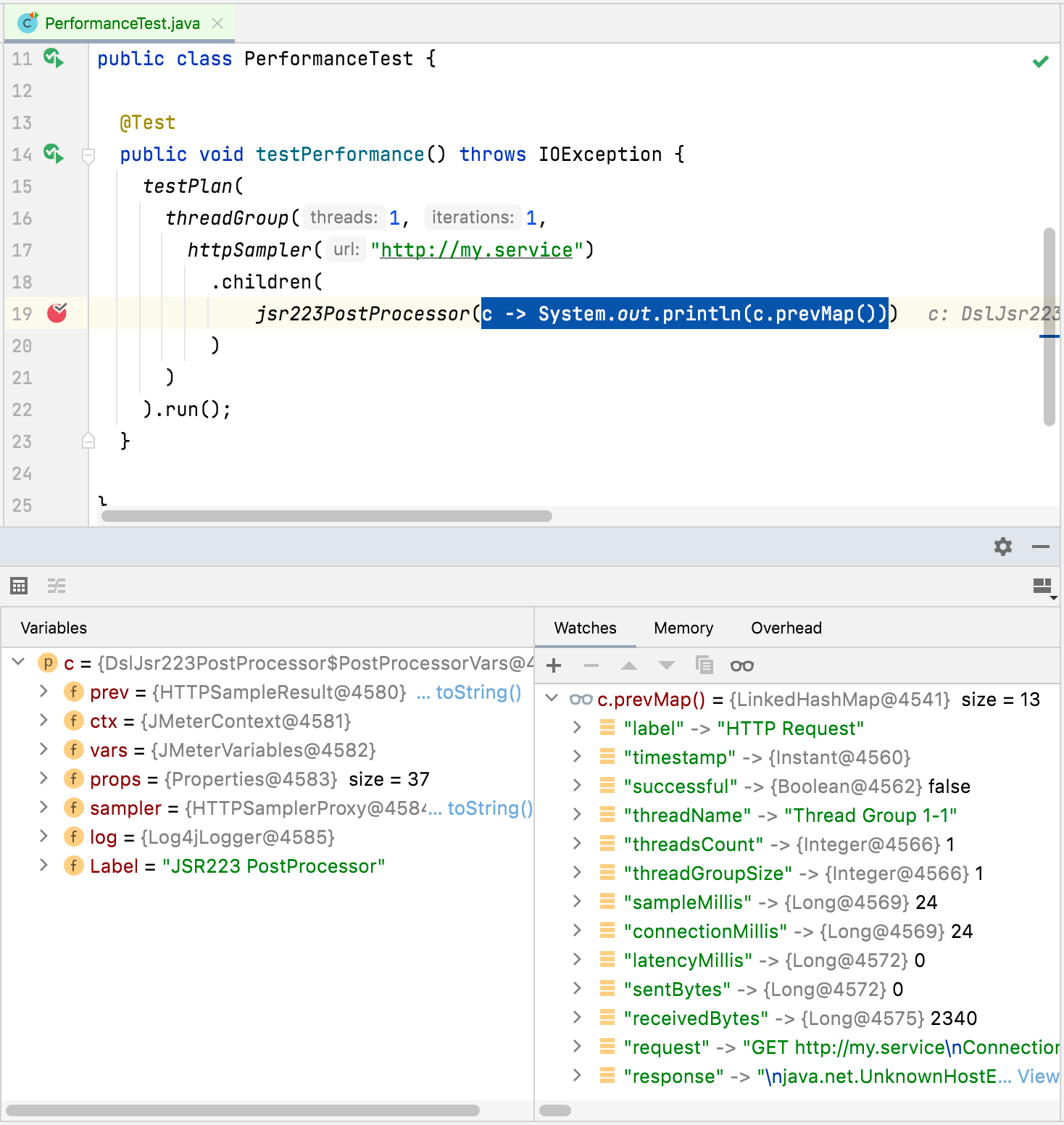

Another alternative is using IDE's built-in debugger by adding a jsr223PostProcessor with java code and adding a breakpoint to the post-processor code. This does not only allow checking sample result information but also JMeter variables and properties values and sampler properties.

Here is an example screenshot using this approach while debugging with an IDE:

TIP

The DSL provides the following methods to ease results and variables visualization and debugging: varsMap(), prevMap(), prevMetadata(), prevMetrics(), prevRequest(), prevResponse(). Check PostProcessorVars and Jsr223ScriptVars for more details.

TIP

Remove such post-processors when no longer needed (when debugging is finished). Leaving them would generate errors when loading the generated JMX test plan or running the test plan in BlazeMeter, OctoPerf, or Azure, in addition to unnecessary processing time and resource usage.

Debug info during test plan execution

Another option is using debugPostProcessor, which adds a sub result including debug information to sampler results. It allows collecting debugging information without affecting test plan execution (unlike the IDE debugger, it doesn't stop the test plan on each breakpoint, which would affect collected metrics), and it allows analyzing the information after test plan execution.

Here is an example that collects JMeter variables that can be reviewed with included resultsTreeVisualizer:

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;

import org.junit.jupiter.api.Test;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

String userIdVarName = "USER_ID";

String usersPath = "/users";

testPlan(

httpDefaults().url("http://my.service"),

threadGroup(1, 1,

httpSampler(usersPath)

.children(

jsonExtractor(userIdVarName, "[].id"),

debugPostProcessor()

),

httpSampler(usersPath + "/${" + userIdVarName + "}")

),

resultsTreeVisualizer()

).run();

}

}

This approach is particularly helpful when debugging extractors, allowing you to see what JMeter variables were or were not generated by previous extractors.

In general, prefer using a post-processor with an IDE debugger breakpoint in the initial stages of test plan development, testing with just 1 thread in thread groups, and use this latter approach when trying to debug issues that are reproducible only in multi-threaded executions or in a particular environment that requires offline analysis (analyzing collected information after test plan execution).

TIP

Use this element in combination with resultsTreeVisualizer to review live executions, or use jtlWriter with withAllFields() or saveAsXml(true) and saveResponseData(true) to generate a jtl file for later analysis.

TIP

By default, debugPostProcessor only includes JMeter variables in the generated sub sampler, which covers the most common use case and keeps memory and disk usage low. debugPostProcessor includes additional methods that allow including other information like sampler properties, JMeter properties, and system properties. Check DslDebugPostProcessor for more details.

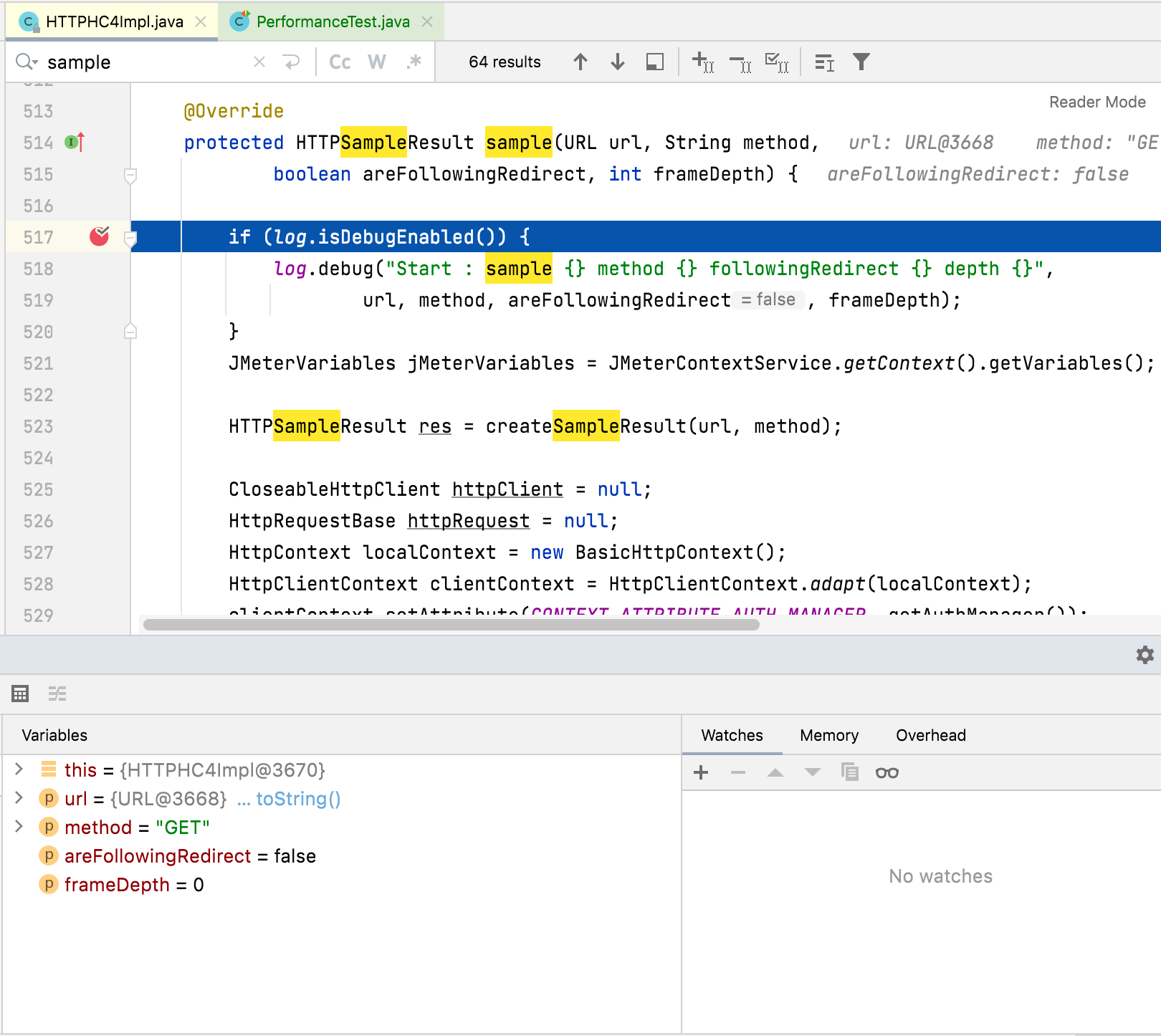

Debug JMeter code

You can even add breakpoints to JMeter code in your IDE and debug the code line by line providing the greatest possible detail.

Here is an example screenshot debugging HTTP Sampler:

TIP

The JMeter class in charge of executing thread logic is org.apache.jmeter.threads.JMeterThread. You can check the classes used by each DSL-provided test element by checking the DSL code.

Debug Groovy code

In some cases, you may want to debug some Groovy script used in some sampler, pre-, or post-processor. For such scenarios, you can check here where we list some options.

Dummy sampler

In many cases you want to test part of the test plan without directly interacting with the service under test: to avoid any potential traffic to the servers, to test border cases that might be difficult to reproduce with the actual server, and to avoid the variability and potential unpredictability of actual server interactions. In such scenarios, you might replace actual samplers with dummySampler (which uses the Dummy Sampler plugin) to test extractors, assertions, controller conditions, and other parts of the test plan under certain conditions/results generated by the samplers.

Here is an example:

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;

import org.junit.jupiter.api.Test;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

String usersIdVarName = "USER_IDS";

String userIdVarName = "USER_ID";

String usersPath = "/users";

testPlan(

httpDefaults().url("http://my.service"),

threadGroup(1, 1,

// httpSampler(usersPath)

dummySampler("[{\"id\": 1, \"name\": \"John\"}, {\"id\": 2, \"name\": \"Jane\"}]")

.children(

jsonExtractor(usersIdVarName, "[].id")

.matchNumber(-1)

),

forEachController(usersIdVarName, userIdVarName,

// httpSampler(usersPath + "/${" + userIdVarName + "}")

dummySampler("{\"name\": \"John or Jane\"}")

.url("http://my.service" + usersPath + "/${" + userIdVarName + "}")

)

),

resultsTreeVisualizer()

).run();

}

}

TIP

By default, and in contrast to what JMeter does, the DSL configures dummy samplers with response time simulation disabled. This speeds up the debugging process, since you don't have to wait for response time simulation (sleeps/waits). If you want a more accurate emulation, you can turn it on through the responseTimeSimulation() method.

Check DslDummySampler for more information on additional configuration and options.

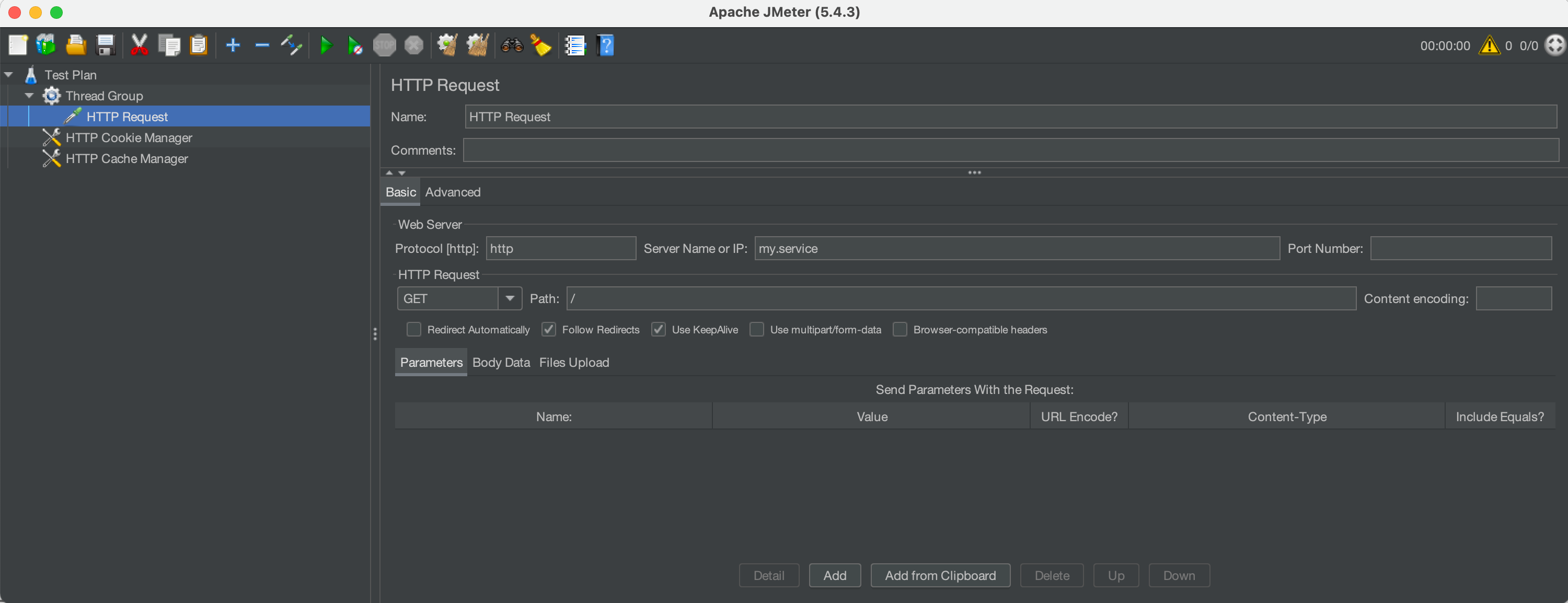

Test plan review in JMeter GUI

A usual requirement for new DSL users who are used to the JMeter GUI is to be able to review the DSL-generated test plan in the familiar JMeter GUI. For this, you can use the showInGui() method in a test plan to open JMeter GUI with the preloaded test plan.

This can be also used to debug the test plan, by adding elements (like view results tree, dummy samplers, debug post-processors, etc.) in the GUI and running the test plan.

Here is a simple example using the method:

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;

import org.junit.jupiter.api.Test;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

testPlan(

threadGroup(2, 10,

httpSampler("http://my.service")

)

).showInGui();

}

}

Which ends up opening a window like this one:

Reporting

Once you have a test plan you would usually want to be able to analyze the collected information. This section contains several ways to achieve this.

Log requests and responses

The main mechanism provided by JMeter (and jmeter-java-dsl) to get information about generated requests, responses, and associated metrics is through the generation of JTL files.

This can be easily achieved in jmeter-java-dsl by using provided jtlWriter like in this example:

import static org.assertj.core.api.Assertions.assertThat;

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;

import java.time.Duration;

import org.junit.jupiter.api.Test;

import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

TestPlanStats stats = testPlan(

threadGroup(2, 10,

httpSampler("http://my.service")

),

jtlWriter("target/jtls")

).run();

assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));

}

}

TIP

By default, jtlWriter writes the most commonly used information to evaluate the performance of the tested service. If you want to trace all the information of each request, you may use jtlWriter with the withAllFields() option. Doing this provides all the information at the cost of additional computation and resource usage (fewer resources for actual load testing). You can also tune which fields jtlWriter includes, to only log what you need; check JtlWriter for more details.

TIP

By default, jtlWriter will log every sample result, but in some cases you might want to log additional info when a sample result fails. In such scenarios you can use two jtlWriter instances like in this example:

testPlan(

threadGroup(2, 10,

httpSampler("http://my.service")

),

jtlWriter("target/jtls/success")

.logOnly(SampleStatus.SUCCESS),

jtlWriter("target/jtls/error")

.logOnly(SampleStatus.ERROR)

.withAllFields(true)

)

TIP

jtlWriter will automatically generate .jtl files applying this format: <yyyy-MM-dd HH-mm-ss> <UUID>.jtl.

If you need a specific file name, for example for later postprocessing logic (eg: using CI build ID), you can specify it by using jtlWriter(directory, fileName).

When specifying the file name, make sure it is unique; otherwise, the JTL contents may be appended to a previously existing jtl file.
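If you build your own file names for jtlWriter(directory, fileName), a hypothetical helper like the following can reproduce the documented <yyyy-MM-dd HH-mm-ss> <UUID>.jtl pattern, or derive a name from a CI build ID. JtlFileNames and the BUILD_ID environment variable are assumptions for illustration; check which variable your CI system actually provides.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.UUID;

// Hypothetical helper mimicking the documented "<yyyy-MM-dd HH-mm-ss> <UUID>.jtl" pattern;
// NOT DSL code. Useful when passing explicit names to jtlWriter(directory, fileName).
final class JtlFileNames {
  private static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("yyyy-MM-dd HH-mm-ss");

  // The random UUID keeps names unique even when two runs start in the same second.
  static String defaultStyleName(LocalDateTime now) {
    return now.format(FORMAT) + " " + UUID.randomUUID() + ".jtl";
  }

  // CI-oriented name; assumes the build ID is unique per build in your CI system.
  static String ciName(String buildId) {
    return "build-" + buildId + ".jtl";
  }

  public static void main(String[] args) {
    System.out.println(defaultStyleName(LocalDateTime.now()));
    // BUILD_ID is an assumed variable name; "local" is used when it is not set.
    System.out.println(ciName(System.getenv().getOrDefault("BUILD_ID", "local")));
  }
}
```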

An additional option, especially targeted towards logging sample responses, is responseFileSaver, which automatically generates a file for each received response. Here is an example:

import static org.assertj.core.api.Assertions.assertThat;

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;

import java.time.Duration;

import java.time.Instant;

import org.junit.jupiter.api.Test;

import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

TestPlanStats stats = testPlan(

threadGroup(2, 10,

httpSampler("http://my.service")

),

responseFileSaver(Instant.now().toString().replace(":", "-") + "-response")

).run();

assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));

}

}

Check ResponseFileSaver for more details.

Finally, if you have more specific needs that are not covered by previous examples, you can use jsr223PostProcessor to define your own custom logic like this:

import static org.assertj.core.api.Assertions.assertThat;

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;

import java.time.Duration;

import org.junit.jupiter.api.Test;

import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

TestPlanStats stats = testPlan(

threadGroup(2, 10,

httpSampler("http://my.service")

.children(jsr223PostProcessor(

"new File('traceFile') << \"${prev.sampleLabel}>>${prev.responseDataAsString}\\n\""))

)

).run();

assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));

}

}

Check DslJsr223PostProcessor for more details.

Real-time metrics visualization and historic data storage

When running tests with JMeter (and in particular with jmeter-java-dsl), a usual requirement is to be able to store test runs in a persistent database, to later review their metrics and compare different test runs. Additionally, jmeter-java-dsl only prints some summary data of a test run in the console while it is running, and, since it doesn't provide any sort of UI, it doesn't allow you to easily analyze such information as you can in JMeter GUI.

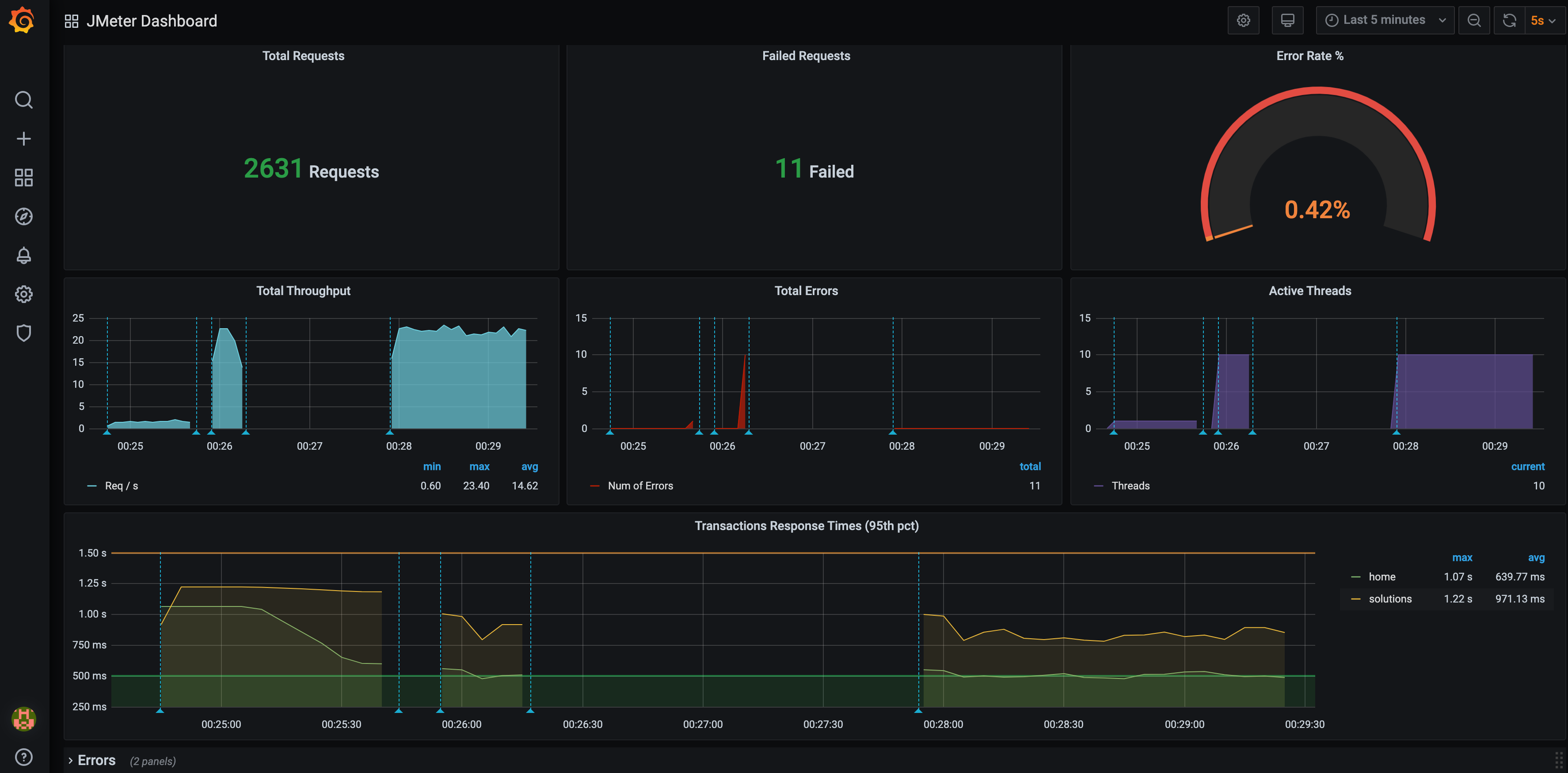

InfluxDB

To overcome these limitations you can use provided support for publishing JMeter test run metrics to InfluxDB or Elasticsearch, which allows keeping a record of all run statistics and, through Grafana, get some nice dashboards like the following one:

This can be easily done using influxDbListener, an existing InfluxDB & Grafana server, and using a dashboard like this one.

Here is an example test plan:

import static org.assertj.core.api.Assertions.assertThat;

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;

import java.time.Duration;

import org.junit.jupiter.api.Test;

import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

TestPlanStats stats = testPlan(

threadGroup(2, 10,

httpSampler("http://my.service")

),

influxDbListener("http://localhost:8086/write?db=jmeter")

).run();

assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));

}

}

If you want to try it locally, you can run docker-compose up (after installing Docker on your machine) inside this directory. After the containers are started, you can open Grafana at http://localhost:3000. Finally, run a performance test using the influxDbListener and you will be able to see the live results, and keep historic data. Cool, isn't it?!

WARNING

Use the provided docker-compose settings for local tests only. It uses weak credentials and is not properly configured for production purposes.

Check InfluxDbBackendListener for additional details and settings.

Graphite

In a similar fashion to InfluxDB, you can use Graphite and Grafana. Here is an example test plan using the graphiteListener:

import static org.assertj.core.api.Assertions.assertThat;

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;

import java.time.Duration;

import org.junit.jupiter.api.Test;

import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

TestPlanStats stats = testPlan(

threadGroup(2, 10,

httpSampler("http://my.service")

),

graphiteListener("localhost:2004")

).run();

assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));

}

}

As in the InfluxDB scenario, you can try it locally by running docker-compose up (after installing Docker on your machine) inside this directory. After the containers are started, you can follow the same steps as in the InfluxDB scenario.

WARNING

Use the provided docker-compose settings for local tests only. It uses weak credentials and is not properly configured for production purposes.

WARNING

graphiteListener is configured to use the Pickle Protocol, and port 2004, by default. This is more efficient than the plain text protocol, which is the one used by default by JMeter.

Elasticsearch

Another alternative is using provided jmeter-java-dsl-elasticsearch-listener module with Elasticsearch and Grafana servers using a dashboard like this one.

To use the module, you will need to include the following dependency in your project:

<dependency>

<groupId>us.abstracta.jmeter</groupId>

<artifactId>jmeter-java-dsl-elasticsearch-listener</artifactId>

<version>1.29.1</version>

<scope>test</scope>

</dependency>

repositories {

...

maven { url 'https://jitpack.io' }

}

dependencies {

...

testImplementation 'us.abstracta.jmeter:jmeter-java-dsl-elasticsearch-listener:1.29.1'

}

And use provided elasticsearchListener() method like in this example:

import static org.assertj.core.api.Assertions.assertThat;

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import static us.abstracta.jmeter.javadsl.elasticsearch.listener.ElasticsearchBackendListener.*;

import java.io.IOException;

import java.time.Duration;

import org.junit.jupiter.api.Test;

import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

TestPlanStats stats = testPlan(

threadGroup(2, 10,

httpSampler("http://my.service")

),

elasticsearchListener("http://localhost:9200/jmeter")

).run();

assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));

}

}

WARNING

This module uses this JMeter plugin which, at its current version, has performance and dependency issues that might affect your project. This and this pull requests fix those issues, but until they are merged and released, you might face such issues.

In the same fashion as InfluxDB, if you want to try it locally, you can run docker-compose up inside this directory and follow similar steps as described for InfluxDB to visualize live metrics in Grafana.

WARNING

Use provided docker-compose settings for local tests only. It uses weak or no credentials and is not properly configured for production purposes.

Check ElasticsearchBackendListener for additional details and settings.

Prometheus

As in previous scenarios, you can also use Prometheus and Grafana.

To use the module, you will need to include the following dependency in your project:

<dependency>

<groupId>us.abstracta.jmeter</groupId>

<artifactId>jmeter-java-dsl-prometheus</artifactId>

<version>1.29.1</version>

<scope>test</scope>

</dependency>

testImplementation 'us.abstracta.jmeter:jmeter-java-dsl-prometheus:1.29.1'

And use provided prometheusListener() method like in this example:

import static org.assertj.core.api.Assertions.assertThat;

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import static us.abstracta.jmeter.javadsl.prometheus.DslPrometheusListener.*;

import java.io.IOException;

import java.time.Duration;

import org.junit.jupiter.api.Test;

import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

TestPlanStats stats = testPlan(

threadGroup(2, 10,

httpSampler("http://my.service")

),

prometheusListener()

).run();

assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));

}

}

As in previous cases, you can try it locally by running docker-compose up inside this directory. After the containers are started, you can follow the same steps as in previous scenarios.

WARNING

Use the provided docker-compose settings for local tests only. It uses weak credentials and is not properly configured for production purposes.

Check DslPrometheusListener for details on listener settings.

Here is an example that shows the default settings used by prometheusListener:

import us.abstracta.jmeter.javadsl.prometheus.DslPrometheusListener.PrometheusMetric;

...

prometheusListener()

.metrics(

PrometheusMetric.responseTime("ResponseTime", "the response time of samplers")

.labels(PrometheusMetric.SAMPLE_LABEL, PrometheusMetric.RESPONSE_CODE)

.quantile(0.75, 0.5)

.quantile(0.95, 0.1)

.quantile(0.99, 0.01)

.maxAge(Duration.ofMinutes(1)),

PrometheusMetric.successRatio("Ratio", "the success ratio of samplers")

.labels(PrometheusMetric.SAMPLE_LABEL, PrometheusMetric.RESPONSE_CODE)

)

.port(9270)

.host("0.0.0.0")

.endWait(Duration.ofSeconds(10))

...

Note that the default settings differ from those of the JMeter Prometheus Plugin used by this module, to allow easier usage and avoid missing metrics at the end of test plan execution.

TIP

When configuring the prometheusListener, always consider setting an endWait that is greater than the Prometheus Server configured scrape_interval, to avoid missing metrics at the end of test plan execution (e.g.: 2x the scrape interval value).
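The rule of thumb above translates into a trivial check. PrometheusEndWait is a hypothetical helper, and the 15 second scrape interval is an assumed value; use the scrape_interval from your own Prometheus configuration.

```java
import java.time.Duration;

// Hypothetical helper for the endWait rule of thumb; not part of the DSL.
final class PrometheusEndWait {

  // Suggests twice the scrape interval, so at least one more scrape happens after the test ends.
  static Duration recommendedEndWait(Duration scrapeInterval) {
    return scrapeInterval.multipliedBy(2);
  }

  // An endWait not greater than the scrape interval risks losing the last metrics.
  static boolean isSafe(Duration endWait, Duration scrapeInterval) {
    return endWait.compareTo(scrapeInterval) > 0;
  }

  public static void main(String[] args) {
    Duration scrapeInterval = Duration.ofSeconds(15); // assumed value from your Prometheus config
    System.out.println("suggested endWait: " + recommendedEndWait(scrapeInterval));
    // The listener default of 10 seconds would be too short for a 15 second scrape interval.
    System.out.println("default 10s safe? " + isSafe(Duration.ofSeconds(10), scrapeInterval));
  }
}
```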

DataDog

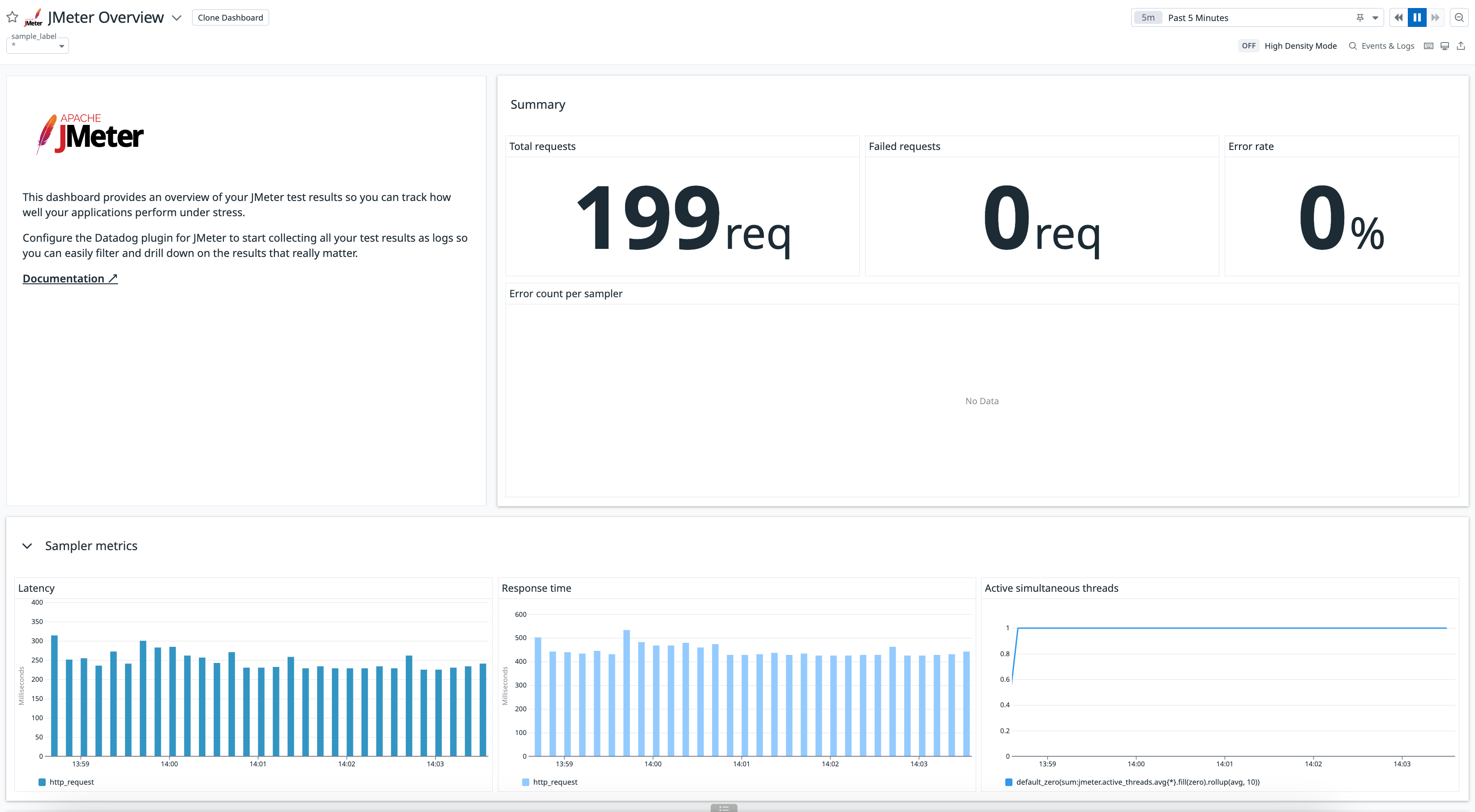

Another option is using jmeter-java-dsl-datadog module which uses existing jmeter-datadog-backend-listener plugin to upload metrics to datadog which you can easily visualize and analize with DataDog provided JMeter dashboard. Here is an example of what you get:

To use the module, just include the dependency:

<dependency>

<groupId>us.abstracta.jmeter</groupId>

<artifactId>jmeter-java-dsl-datadog</artifactId>

<version>1.29.1</version>

<scope>test</scope>

</dependency>

repositories {

...

maven { url 'https://jitpack.io' }

}

dependencies {

...

testImplementation 'us.abstracta.jmeter:jmeter-java-dsl-datadog:1.29.1'

}

And use provided datadogListener() method like in this example:

import static org.assertj.core.api.Assertions.assertThat;

import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import static us.abstracta.jmeter.javadsl.datadog.DatadogBackendListener.*;

import java.io.IOException;

import java.time.Duration;

import org.junit.jupiter.api.Test;

import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

@Test

public void testPerformance() throws IOException {

TestPlanStats stats = testPlan(

threadGroup(2, 10,

httpSampler("http://my.service")

),

datadogListener(System.getenv("DATADOG_APIKEY"))

).run();

assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));

}

}

TIP

If you use a DataDog instance on a site other than US1 (the default one), you can use the .site(DatadogSite) method to select the proper site.

TIP

You can use .resultsLogs(true) to send sample results as logs to DataDog, to get more information in DataDog on each sample of the test plan (for example, for tracing). Enabling this property generates additional network traffic, which may affect test plan execution, and additional DataDog costs, so use it sparingly.

TIP

You can use .tags() to add additional information to metrics sent to DataDog. Check DataDog documentation for more details.

Generate HTML reports from test plan execution

After running a test plan you would usually like to visualize the results in a friendly way that eases the analysis of collected information.

One way to do that, and the preferred one, is through the previously mentioned alternatives.

Another way might just be using previously introduced jtlWriter and then loading the jtl file in JMeter GUI with one of JMeter provided listeners (like view results tree, summary report, etc.).

Another alternative is to generate a standalone report for the test plan execution with the htmlReporter provided by jmeter-java-dsl, like this:

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;
import java.time.Duration;
import org.junit.jupiter.api.Test;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void testPerformance() throws IOException {
    TestPlanStats stats = testPlan(
        threadGroup(2, 10,
            httpSampler("http://my.service")
        ),
        htmlReporter("reports")
    ).run();
    assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));
  }

}

WARNING

htmlReporter will create one directory for each generated report by applying the following template: <yyyy-MM-dd HH-mm-ss> <UUID>.

If you need a particular name for the report directory, for example for post-processing logic (e.g., adding a CI build ID), you can use htmlReporter(reportsDirectory, name) to specify the name.

When specifying the name, make sure it is unique; otherwise, report generation will fail after test plan execution.
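As an illustration of building such a unique name, here is a minimal stdlib-only sketch (not part of the DSL) that combines a CI build ID with a timestamp formatted like the date part of the default template. The BUILD_ID environment variable and the build- prefix are assumptions; adapt them to your CI server.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

public class ReportNames {

  // Same date/time layout as the default report directory template.
  // Hyphens instead of colons keep the name a valid directory name on Windows.
  private static final DateTimeFormatter TIMESTAMP =
      DateTimeFormatter.ofPattern("yyyy-MM-dd HH-mm-ss");

  /**
   * Builds a report directory name from a CI build ID and a timestamp.
   * The build ID is assumed to be unique per CI run; the timestamp keeps
   * reruns of the same build from reusing an existing directory name.
   */
  public static String reportName(String buildId, LocalDateTime when) {
    return "build-" + buildId + " " + TIMESTAMP.format(when);
  }

  public static void main(String[] args) {
    // BUILD_ID is a hypothetical CI variable; falls back to "local" outside CI.
    String buildId = System.getenv().getOrDefault("BUILD_ID", "local");
    System.out.println(reportName(buildId, LocalDateTime.now()));
  }
}
```

The returned string could then be passed as the name argument of htmlReporter(reportsDirectory, name).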

TIP

By default, time graphs group metrics per minute, but you can change this with the provided timeGraphsGranularity method.
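To picture what the granularity controls, here is a simplified stdlib-only model (an assumption for illustration, not JMeter's actual report generator code) that floors each sample timestamp to the start of its time bucket and counts samples per bucket. A coarser granularity merges more samples into each point; a finer one yields more, and noisier, points.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class TimeBuckets {

  /**
   * Counts samples per time bucket, the way a time graph groups its points.
   * Each timestamp is floored to the start of its bucket, measured from the epoch.
   */
  public static Map<Instant, Integer> countPerBucket(List<Instant> samples, Duration granularity) {
    long bucketMillis = granularity.toMillis();
    Map<Instant, Integer> counts = new TreeMap<>();
    for (Instant sample : samples) {
      long start = Math.floorDiv(sample.toEpochMilli(), bucketMillis) * bucketMillis;
      counts.merge(Instant.ofEpochMilli(start), 1, Integer::sum);
    }
    return counts;
  }

  public static void main(String[] args) {
    Instant t0 = Instant.parse("2024-01-01T00:00:00Z");
    List<Instant> samples = List.of(t0, t0.plusSeconds(20), t0.plusSeconds(70));
    System.out.println(countPerBucket(samples, Duration.ofMinutes(1)).size());  // 2
    System.out.println(countPerBucket(samples, Duration.ofSeconds(10)).size()); // 3
  }
}
```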

Live built-in graphs and stats

Sometimes you want live statistics on the test plan without installing additional tools, and you are not concerned about keeping historical data.

You can use dashboardVisualizer to get live charts and stats for quick review.

To use it, you need to add the following dependency:

<dependency>
  <groupId>us.abstracta.jmeter</groupId>
  <artifactId>jmeter-java-dsl-dashboard</artifactId>
  <version>1.29.1</version>
  <scope>test</scope>
</dependency>

testImplementation 'us.abstracta.jmeter:jmeter-java-dsl-dashboard:1.29.1'

Then use it as you would any of the previously mentioned listeners (like influxDbListener and jtlWriter).

Here is an example:

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;
import static us.abstracta.jmeter.javadsl.dashboard.DashboardVisualizer.*;

import java.io.IOException;
import java.time.Duration;
import org.junit.jupiter.api.Test;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void testPerformance() throws IOException {
    TestPlanStats stats = testPlan(
        threadGroup("Group1")
            .rampToAndHold(10, Duration.ofSeconds(10), Duration.ofSeconds(10))
            .children(
                httpSampler("Sample 1", "http://my.service")
            ),
        threadGroup("Group2")
            .rampToAndHold(20, Duration.ofSeconds(10), Duration.ofSeconds(20))
            .children(
                httpSampler("Sample 2", "http://my.service/get")
            ),
        dashboardVisualizer()
    ).run();
    assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));
  }

}

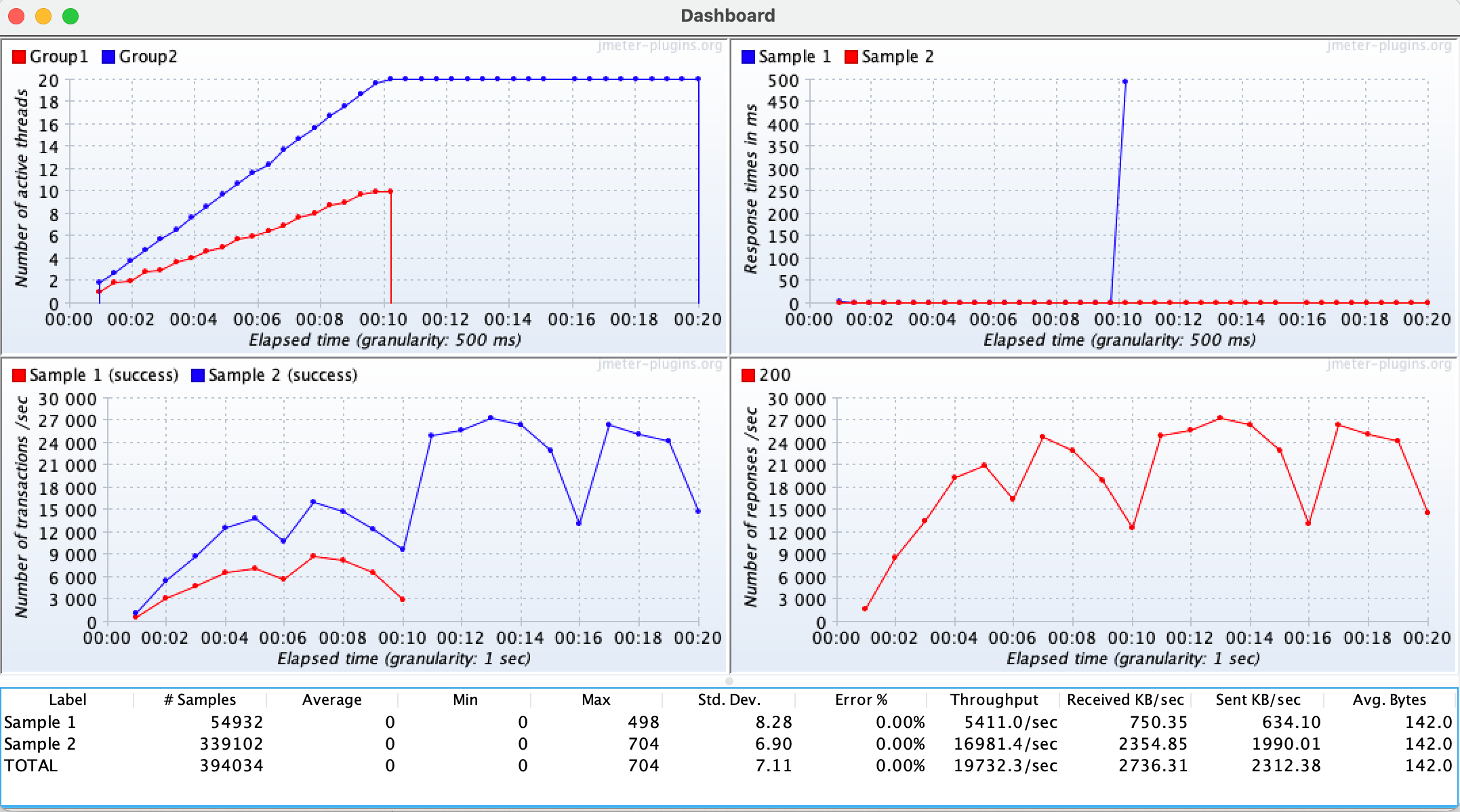

The dashboardVisualizer will pop up a window like the following one, which you can use to trace statistics while the test plan runs:

WARNING

The dashboard consumes additional resources (CPU and RAM) on the machine generating the load, which may affect test plan execution and reduce the number of concurrent threads you can reach on that machine. In general, prefer one of the previously mentioned methods and use the dashboard just for local testing and quick feedback.

Remember to remove it when it is no longer needed in the test plan.

WARNING

The test will not end until you close all popup windows. This allows you to see the final charts and statistics of the plan before ending the test.

TIP

As with jtlWriter and influxDbListener, you can place dashboardVisualizer at different levels of the test plan (at the test plan level, at the thread group level, as a child of a sampler, etc.), to only capture statistics of that particular part of the test plan.

Response processing

Check for expected response

By default, JMeter marks any HTTP request with a failure response code (4xx or 5xx) as failed, which allows you to easily identify when a request unexpectedly fails. But in many cases this is not enough, or not desirable, and you need to check that the response body (or some other field) contains (or does not contain) a certain string.

This is usually accomplished in JMeter with Response Assertions, which provide an easy and fast way to verify that you get the proper response for each step of the test plan, marking the request as a failure when the specified condition is not met.

Here is an example of how to specify a response assertion in jmeter-java-dsl:

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;
import java.time.Duration;
import org.junit.jupiter.api.Test;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void testPerformance() throws IOException {
    TestPlanStats stats = testPlan(
        threadGroup(2, 10,
            httpSampler("http://my.service")
                .children(
                    responseAssertion().containsSubstrings("OK")
                )
        )
    ).run();
    assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));
  }

}

Check Response Assertion for more details and additional options.
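The combined effect of JMeter's default status check and a containsSubstrings assertion can be modeled in a few lines of plain Java. This is a simplified sketch, not JMeter code: it assumes, as stated above, that 4xx and 5xx codes fail, and that every given substring must be present (JMeter's default AND matching). It shows why the assertion is useful: a 200 response whose body reports an error passes the status check but fails the assertion.

```java
public class ResponseCheck {

  // Simplified default HTTP status check: 4xx and 5xx codes mark the sample as failed.
  static boolean statusOk(int responseCode) {
    return responseCode < 400;
  }

  // Simplified "contains substrings" assertion: every substring must appear in the body.
  static boolean containsAll(String body, String... substrings) {
    for (String s : substrings) {
      if (!body.contains(s)) {
        return false;
      }
    }
    return true;
  }

  // A sample counts as successful only if both checks pass.
  public static boolean sampleSuccessful(int responseCode, String body, String... substrings) {
    return statusOk(responseCode) && containsAll(body, substrings);
  }

  public static void main(String[] args) {
    System.out.println(sampleSuccessful(200, "status: OK", "OK"));   // true
    System.out.println(sampleSuccessful(200, "status: DOWN", "OK")); // false: body check fails
    System.out.println(sampleSuccessful(503, "OK", "OK"));           // false: status check fails
  }
}
```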

For more complex scenarios, check the following sections.

Check for expected JSON

When checking for JSON responses, it is usually easier to just use jsonAssertion. Here is an example:

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;
import java.time.Duration;
import org.apache.http.entity.ContentType;
import org.junit.jupiter.api.Test;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void testPerformance() throws IOException {
    TestPlanStats stats = testPlan(
        threadGroup(2, 10,
            httpSampler("http://my.service/accounts")
                .post("{\"name\": \"John Doe\"}", ContentType.APPLICATION_JSON)
                .children(
                    jsonAssertion("id"),
                    // stores the id field value so the next request can use ${ACCOUNT_ID}
                    jsonExtractor("ACCOUNT_ID", "id")
                ),
            httpSampler("http://my.service/accounts/${ACCOUNT_ID}")
        )
    ).run();
    assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));
  }

}

TIP

The previous example just checks that the sample result JSON contains an id field. You can use the matches(regex), equalsTo(value), or even equalsToJson(json) methods to check the value associated with id. Additionally, you can use the not() method to check for the inverse condition, e.g., that the id field is absent, or that its value does not match a given regular expression or is not equal to a given value.

TIP

By default, this element uses the JMeter JSON JMESPath Assertion element and, consequently, JMESPath as the query language.

If you want to use the JMeter JSON Assertion element, and consequently JSONPath as the query language, you can simply use .queryLanguage(JsonQueryLanguage.JSON_PATH) and a JSONPath query.

Change sample result statuses with custom logic

Sometimes response assertions and JMeter's default behavior are not enough, and custom logic is required. In such scenarios you can use jsr223PostProcessor, as in this example, where the 429 status code is not considered a failure:

import static org.assertj.core.api.Assertions.assertThat;
import static us.abstracta.jmeter.javadsl.JmeterDsl.*;

import java.io.IOException;
import java.time.Duration;
import org.junit.jupiter.api.Test;
import us.abstracta.jmeter.javadsl.core.TestPlanStats;

public class PerformanceTest {

  @Test
  public void testPerformance() throws IOException {
    TestPlanStats stats = testPlan(
        threadGroup(2, 10,
            httpSampler("http://my.service")
                .children(
                    jsr223PostProcessor(
                        "if (prev.responseCode == '429') { prev.successful = true }")
                )
        )
    ).run();
    assertThat(stats.overall().sampleTimePercentile99()).isLessThan(Duration.ofSeconds(5));
  }

}

You can also use a Java lambda instead of a Groovy script, which benefits from Java type safety and IDE code auto-completion, and consumes less CPU:

jsr223PostProcessor(s -> {
  // s.prev is the sample result of the sampler this post-processor is attached to
  if ("429".equals(s.prev.getResponseCode())) {
    s.prev.setSuccessful(true);
  }
})

WARNING

Even though Java lambdas have several benefits, they are also less portable. Check the following section for more details.

Check DslJsr223PostProcessor for more details and additional options.

WARNING

JSR223PostProcessor is a very powerful tool, but it is neither the only nor the best alternative in many cases where JMeter already provides a simpler one. For instance, the previous example might be implemented with the previously presented Response Assertion.

Lambdas

As previously mentioned, Java lambdas are generally more performant than Groovy scripts (here are some comparisons) and are easier to develop and maintain thanks to type safety, IDE autocompletion, etc.

However, they are also less portable.

For instance, they will not work out of the box with remote engines (like BlazeMeterEngine) or when saving the test plan as a JMX file and running it in standalone JMeter.
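One plausible way to see the portability gap, sketched here with plain Java rather than the DSL: a public static class has a stable name that another process (or a saved test plan) can use to recreate it via reflection, while a lambda's class is generated at runtime and cannot be looked up again by name. PostProcessorScript and Accept429 below are hypothetical stand-ins, not the DSL's real script interface, and this is not how remote engines actually ship code.

```java
import java.util.HashMap;
import java.util.Map;

public class ScriptPortability {

  // Hypothetical stand-in for a script interface; NOT the DSL's real interface.
  public interface PostProcessorScript {
    void run(Map<String, Object> sample);
  }

  // A public static class has a stable binary name ("ScriptPortability$Accept429").
  public static class Accept429 implements PostProcessorScript {
    @Override
    public void run(Map<String, Object> sample) {
      if ("429".equals(sample.get("responseCode"))) {
        sample.put("successful", true);
      }
    }
  }

  // Recreates a script from its class name via reflection, as a process that
  // only received the name would have to.
  public static PostProcessorScript load(String className) {
    try {
      return (PostProcessorScript) Class.forName(className)
          .getDeclaredConstructor()
          .newInstance();
    } catch (ReflectiveOperationException e) {
      throw new IllegalArgumentException("Cannot recreate script " + className, e);
    }
  }

  public static void main(String[] args) {
    Map<String, Object> sample = new HashMap<>();
    sample.put("responseCode", "429");
    sample.put("successful", false);
    load(Accept429.class.getName()).run(sample);
    System.out.println(sample.get("successful")); // true

    // A lambda's class is generated at runtime, so Class.forName cannot find it by name.
    PostProcessorScript lambda = s -> s.put("successful", true);
    try {
      load(lambda.getClass().getName());
      System.out.println("lambda class was loadable by name");
    } catch (IllegalArgumentException e) {
      System.out.println("lambda class not loadable by name: " + lambda.getClass().getName());
    }
  }
}
```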

One option is to use Groovy scripts and the __groovy function, but by doing so you lose the previously mentioned benefits.

Here is another approach that still lets you benefit from Java code (vs. Groovy scripts) while running in remote engines and standalone JMeter.

Lambdas in remote engine

Here are the steps to run test plans containing Java lambdas in BlazeMeterEngine:

Replace all Java lambdas with public static classes implementing the proper script interface.

For example, if you have the following test:

public class PerformanceTest {

  @Test
  public void testPerformance() throws Exception {
    testPlan(
        threadGroup(2, 10,
            httpSampler("http://my.service")
                .children(
                    jsr223PostProcessor(s -> {
                      if ("429".equals(s.prev.getResponseCode())) {
                        s.prev.setSuccessful(true);
                      }
                    })
                )
        )
    ).runIn(new BlazeMeterEngine(System.getenv("BZ_TOKEN")));
  }

}

You can change it to: